The State of Darkness 2.0

Year 2023

Enactive co-presence experience in VR

CO-PRESENCE WITH THE OTHER SOMEWHERE IN THE FUTURE

CONCEPT

The State of Darkness is a virtual reality installation in which human and non-human lives coexist. The former is lived by the participant, the latter by the non-human Other. The narrative VR system is enactive, that is, every element of the narrative space is in a reciprocally dependent state with the other elements.

The participant’s experiential moves are interpreted from their biosensor measurements in real time and then fed back to drive the different elements of the enactive narrative system. In turn, the facial and bodily behaviour of the artificial Other feeds back into the participant’s experiential states. The scenography and the soundscape adapt to the behaviours of the two beings, and these adaptations in turn affect the atmosphere of the intimate co-presence between the human and the Other.
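The feedback loop described above can be illustrated with a minimal sketch. It is not the installation's actual implementation: the names `normalise`, `EnactiveState`, and `narrative_parameters`, the sensor ranges, the equal weighting of heart rate and EDA, and the smoothing factor are all assumptions chosen for illustration.

```python
from dataclasses import dataclass


def normalise(value: float, low: float, high: float) -> float:
    """Clamp value to [low, high] and rescale it to the range [0, 1]."""
    if high <= low:
        raise ValueError("high must be greater than low")
    return min(1.0, max(0.0, (value - low) / (high - low)))


@dataclass
class EnactiveState:
    """Hypothetical running estimate of the participant's arousal, in [0, 1]."""

    arousal: float = 0.0

    def update(self, hr_bpm: float, eda_microsiemens: float,
               smoothing: float = 0.2) -> float:
        # Assumed sensor ranges; a real system would calibrate per participant.
        hr = normalise(hr_bpm, 50.0, 120.0)
        eda = normalise(eda_microsiemens, 1.0, 20.0)
        target = 0.5 * hr + 0.5 * eda  # assumed equal weighting
        # Exponential smoothing so the narrative elements change gradually.
        self.arousal += smoothing * (target - self.arousal)
        return self.arousal


def narrative_parameters(arousal: float) -> dict:
    """Map the arousal estimate to hypothetical narrative controls."""
    return {
        "other_expression_intensity": arousal,
        "scenography_decay_rate": arousal,
        "soundscape_tension": arousal,
    }


state = EnactiveState()
level = state.update(hr_bpm=120.0, eda_microsiemens=20.0)
controls = narrative_parameters(level)
```

In this sketch each new biosensor reading nudges a single smoothed value, which then drives the Other's expression, the scenography, and the soundscape together. The real system presumably uses richer signals (for example eye tracking) and separate mappings for each element.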

The concept of non-human narrative allows the State of Darkness 2.0 to set the human-centric perspective against a non-human one. The intriguing question is whether narratives and the narrative faculty should be considered exclusively characteristic of humans, or whether the idea of narrative can be extended to other domains of life, or even to the domain of artificially humanlike beings.

STORY

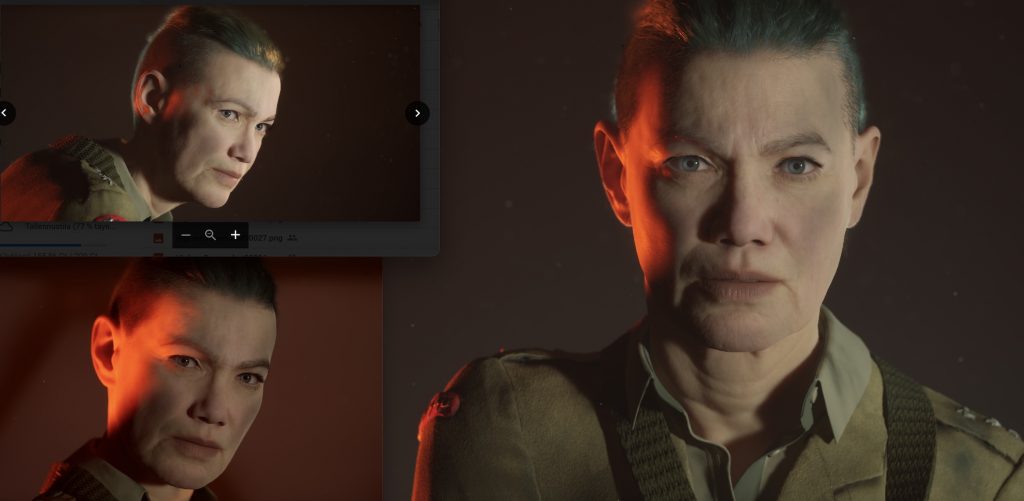

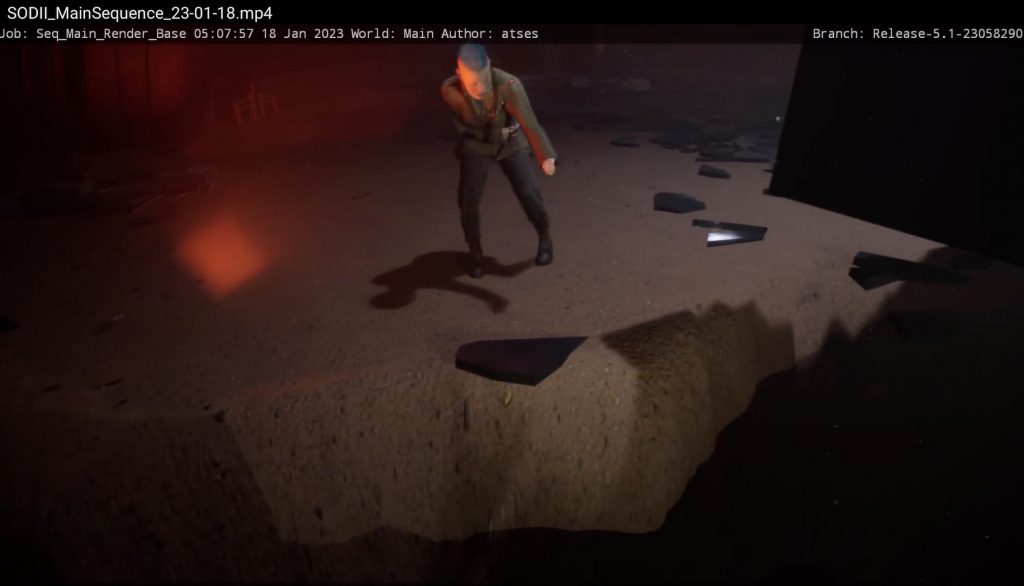

We are somewhere in the future, not sure if it is the year 2200 on Earth or the year 3200 on some exoplanet. The participant and the Other meet face-to-face in an abandoned departure hall dome at the edge of the human-built world, threatened by the furious forces of nature outside the dome. What could be the story of the Other: a sole survivor, or a dutiful officer? While no words are exchanged, the eyes tell everything that the participant needs to know, and vice versa. The Other observes the participant’s experiential states, be it anxiety, curiosity, fear, or empathy… During the co-presence, which lasts around 6 minutes, the participant is invited to reflect on and feel for the Other in the ambiguous situation. The surrounding space is gradually crumbling, its destruction adapting to the intensity of the facial interaction between the two beings. What could their relationship turn into, if they were given more time?

Example of the SOD 2.0 interactive music effect for co-presence

Images above: screenshots from the low-resolution Unreal Engine (UE) render

Technical information

- Set-up takes 4-5 hours; take-down takes 1-1.5 hours

- On a scale from 1 (no darkness) to 10 (full darkness), the project prefers a darkness level of 9

- The project uses headphones and does not emit external sounds (very silent)

- One person at a time can experience the installation; however, others may watch the images projected on the screen (note that sound may also be added to the projection, making the installation a bit louder)

- Duration is 8 minutes, plus 2-3 minutes to set up the experiencer (biosensors, HMD)

The set-up image: The set-up is similar to the team’s previous SOD 1.0 (2018) installation: a seated VR experiencer using an HMD, with 1-2 large screen projections for external viewers.

The plan for presentation & space equipment:

- Dark, silent space 3m x 3m

- 3 walls (optimally)

- 1 spotlight to light the desk

- 1 table, 2 chairs

- Screen projection option: a large flat screen or, alternatively, a wall about 2 m wide

- Projector

- Gaming computer / laptop w/ Unreal Engine installed

- Noise-cancelling headphones with a long cord

- VR headset with eye-tracker

- 2 biosensors to measure heart rate (HR) and electrodermal activity (EDA)

- Multiple electricity outlets

- Electrical power 3-5 kW

- Internet connection needed

SOD 2.0 TEAM:

Pia Tikka is the Head of the Enactive Virtuality Lab, director-producer.

Tanja Bastamow is a virtual scenographer working on experimental projects that combine scenography with VR and AR. Her key areas of interest are immersive virtual environments and emergent spatial narratives in which human and non-human elements can mix in unexpected ways. Currently, she is a doctoral candidate at Aalto University, doing research on emergent virtual environments. In addition, she works as a virtual designer in the LiDiA – Live + Digital Audiences artistic research project (2021-23). She is a founding member of the Virtual Cinema Lab research group and has previously held the position of lecturer in digital design methods at Aalto University.

Matias Harju is a Finnish sound designer and musician specialising in immersive and interactive sonic storytelling. His main job is developing audio augmented reality with six-degrees-of-freedom as a narrative medium at WHS Theatre Union in Helsinki. He is also active in making immersive sound design and adaptive music for other projects including virtual reality, video games, installations, and live performances. Matias is a Master of Music from Sibelius Academy with a background in multiple musical genres, music education, and audio technology. He also holds a master’s degree from the Sound in New Media programme at Aalto University.

Ats Kurvet is a 3D real-time graphics and virtual reality application developer and consultant with over 8 years of industry experience. He specialises in lighting, character development and animation, game and user experience design, 3D modeling and environment development, shader and material development, and tech art. He has worked for Crytek GmbH as a lighting artist and runs ExteriorBox OÜ. His focus in working with the Enactive Virtuality Lab is on researching the visual aspects of digital human development and implementation.

Ilkka Kosunen has been working on physiological computing since 2006 and is an expert in building real-time adaptive systems based on psychophysiological signals such as EDA, EMG, EEG, ECG, EOG, fNIRS, and PPG, as well as magnetometers and accelerometers, eye-trackers, depth cameras, microphones, and light sensors, to create emotionally adaptive games, adaptive virtual commercials, dance performances, and emotionally adaptive VR experiences. He holds a Ph.D. in Computer Science and has published over 40 scientific articles on physiological and affective computing.

Abdallah Sham graduated with a master’s degree in Robotics and Computer Engineering from the University of Tartu, Estonia. His doctoral studies at the Digital Technology Institute, Tallinn University (2020-2023) focus on Artificial Intelligence (AI), Virtual Reality (VR), and Machine Learning (ML). His work concentrates on Deep Neural Network models that can be used to analyze human facial expressions in face-to-face communication (dyadic interaction) with virtual characters in a socially relevant manner.

Eeva R. Tikka is a screenwriter, author, and librettist. She works as a script consultant and dramaturgist, with over 800 consultations for regular customers, film production companies, and writers (screenplays, TV series scripts, theatrical plays, and other audiovisual material). She is also a 3D artist and animator (Movie Hybrid Stories, audiovisual graphic novels) and the CEO, publisher, and editor of Gate of Stories.