Enactive Virtuality Lab presents the most recent work by its team members.

The event takes place at Tallinn University, Nova building, N-406 Kinosaal.

Date: 24.11.2022, 14:00-18:00 (EET / CET +1)

Join Zoom Meeting https://zoom.us/j/95032524868

Speakers and Schedule, see below.

SPEAKERS

Tanja Bastamow: Virtual scenography in transformation

The performative possibilities of virtual environments

Scenography has historically been understood as the illustrative support for a staged drama. However, in recent decades, the field has expanded to encompass the overall design of performance events, actions and experiences. Positioning this expanded understanding of scenography in dialogue with virtual reality (VR) opens up interesting possibilities for designing virtual scenography which can become an enabler of emerging narratives, unforeseen events and unexpected encounters. In my research I investigate the question of shifting agencies and the virtual scenographer’s role as a co-creator rather than a traditional author-designer. Who – and what – become the creators, performers and spectators in virtual experiences and encounters in which the real-time responsiveness, transformability, (im)materiality and immersivity of virtual scenography play a key role? In my talk I will introduce thoughts around these questions by describing the process of building the virtual scenography for the “State of Darkness II” VR experience.

Tanja Bastamow is a virtual scenographer working on experimental projects combining scenography with virtual and mixed reality environments. Bastamow’s key areas of interest are immersive virtual environments, the creative potential of technology as a tool for designing emergent spatial narratives, and creating scenographic encounters in which human and non-human elements can mix in new and unexpected ways. Currently, she is a doctoral candidate at Aalto University’s Department of Film, Television and Scenography, doing research on the performative possibilities of real-time virtual environments. In addition to this, she is working as a virtual designer in the LiDiA – Live + Digital Audiences artistic research project (2021-23). She is also a founding member of the Virtual Cinema Lab research group and has previously held the position of lecturer in digital design methods at Aalto University.

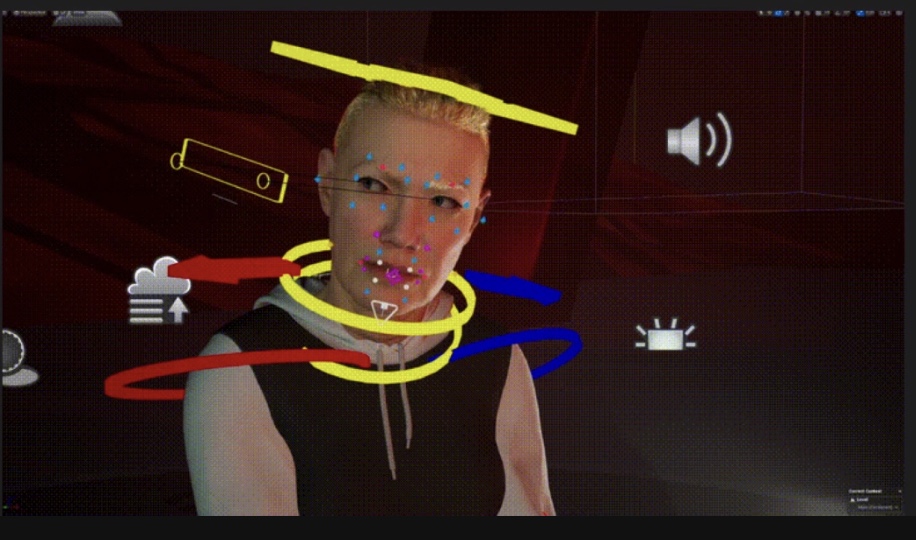

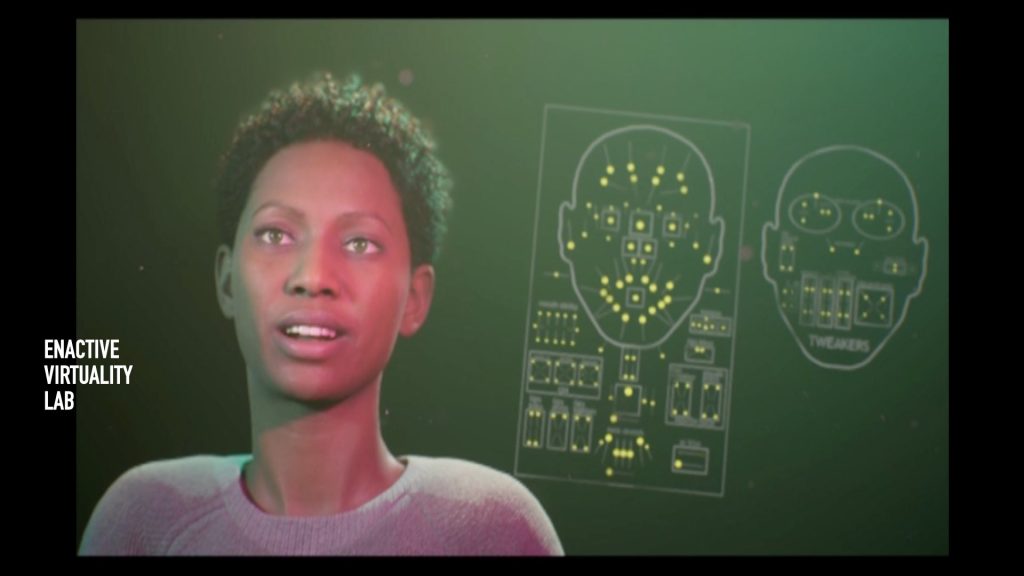

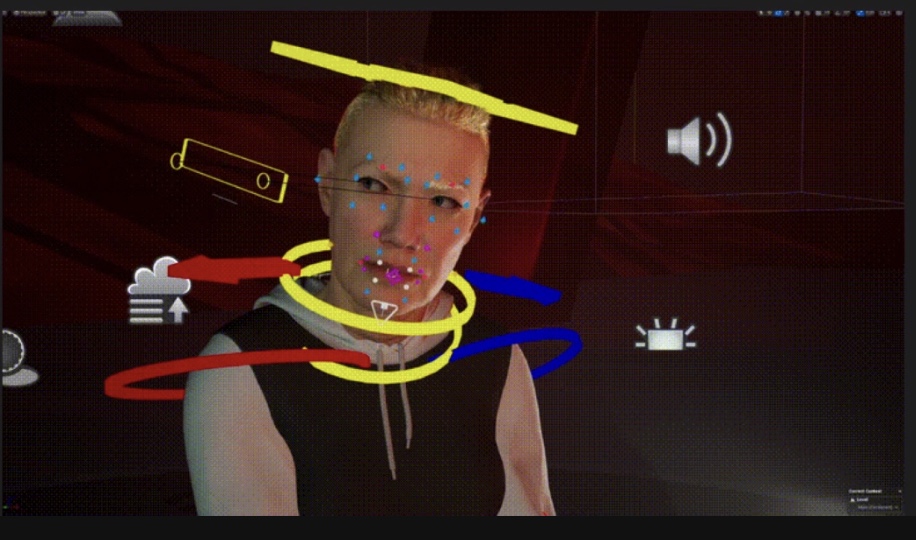

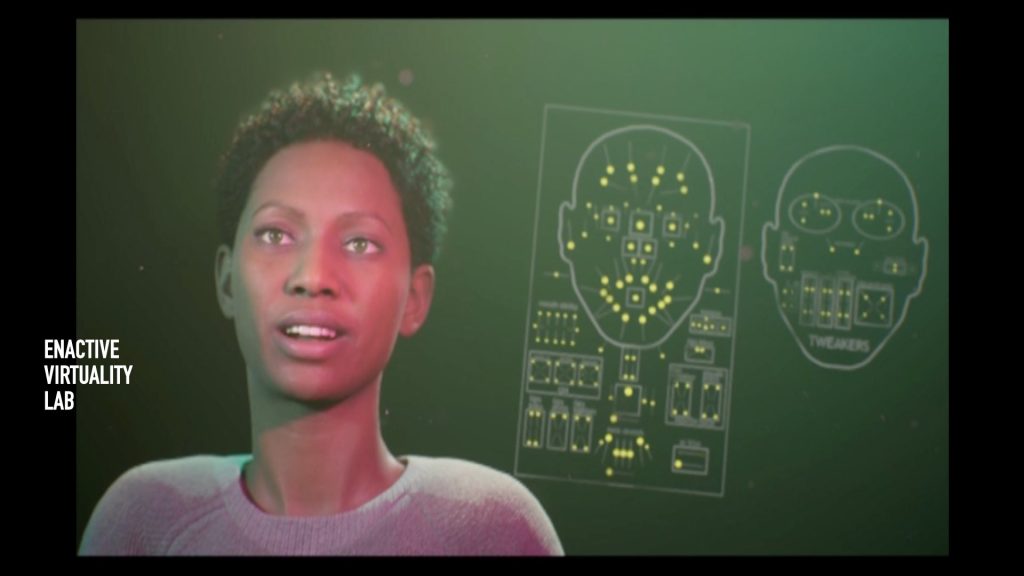

Ats Kurvet: Designing virtual characters

Ats Kurvet is a 3D real-time graphics and virtual reality application developer and consultant with over 8 years of industry experience. He specialises in lighting, character development and animation, game and user experience design, 3D modeling and environment development, shaders and material development and tech art. He has worked for Crytek GmbH as a lighting artist and runs ExteriorBox OÜ. His focus in working with the Enactive Virtuality Lab is on researching the visual aspects of digital human development and implementation.

Matias Harju: Traits of Virtual Reality Sound Design

Whilst sound design for VR borrows a lot from other media such as video games and films, VR sets some unique conditions for sonic thinking and technical approaches. Spatial sound is inarguably one of the most significant areas in this respect. It entails the whole process from narrative decisions to source material recordings to 3D audio rendering on headphones. Other sound design considerations in VR may relate to the level of sonic realism and the role of sound in general. My talk will briefly outline some of the characteristics of sound in VR from both artistic and technical perspectives.

Matias Harju is a sound designer and musician specialising in immersive and interactive sonic storytelling. He is currently developing Audio Augmented Reality (AAR) as a narrative and interactive medium at WHS Theatre Union in Helsinki. He is also active in making adaptive music and immersive sound design for other projects including VR, AR, video games, installations, and live performances. Matias holds a Master of Music from the Sibelius Academy, with a background in multiple musical genres, music education, and audio technology. He also holds a master’s degree from the Sound in New Media programme at Aalto University.

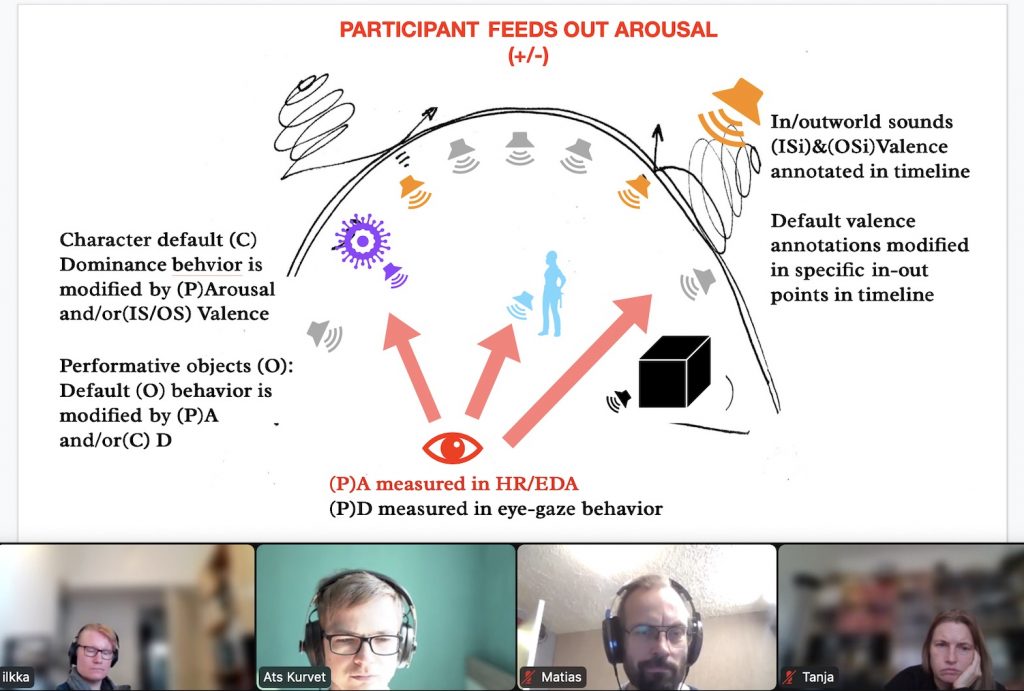

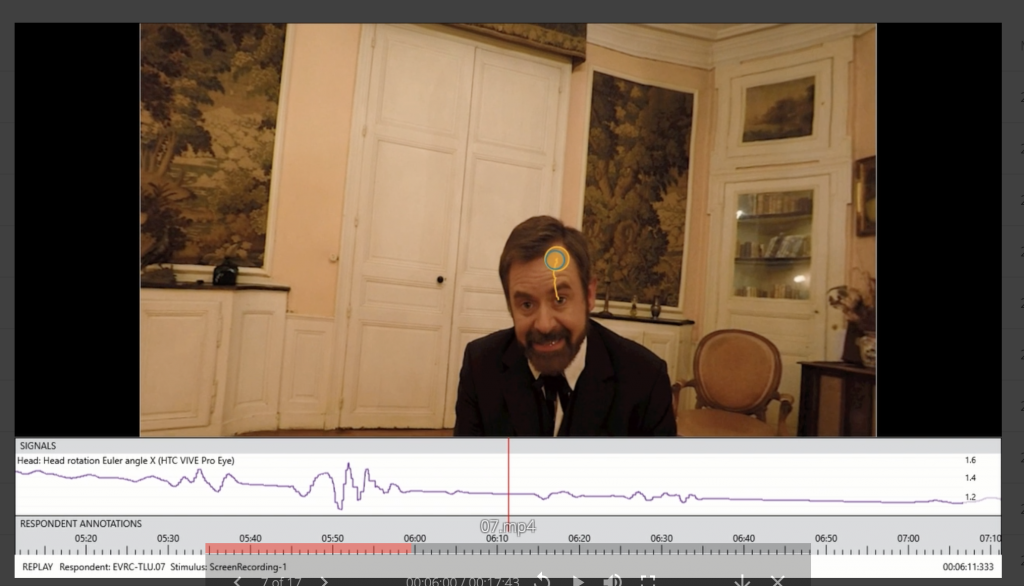

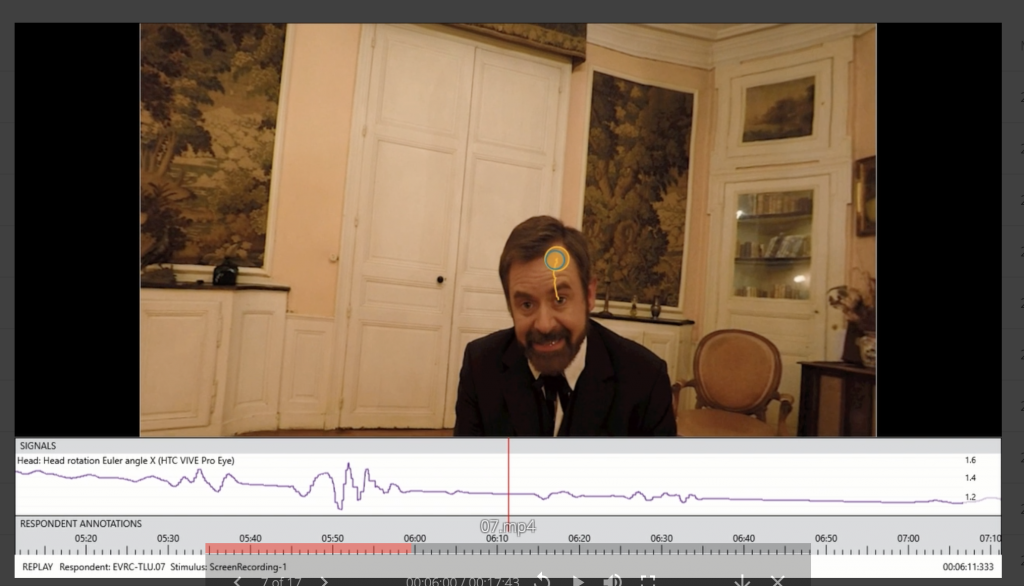

Marie-Laure Cazin: Freud’s last hypnosis, validating emotion-driven enactions in cinematic VR

Dr Marie-Laure Cazin will present the study she has been conducting in the context of her European Mobilitas + postdoctoral grant with Enactive Virtuality Lab BFM, TLU (2021-2022). She is currently developing an artistic prototype of Emotive VR for interactive 360° film. The project aims to achieve emotional interactivity between the viewer and the film’s soundtrack. While viewers experience her VR film, Freud’s last hypnosis, their emotional responses are collected with physiological sensors and eye-tracking, and with personal interviews after the viewing. The project is a collaboration with Dr Mati Mõttus from the Interaction Lab of the School of Digital Technologies (TLU) and Matias Harju, composer and sound designer (Finland).

Marie-Laure Cazin is a French filmmaker and researcher. She teaches at the Ecole Supérieure d’Art et de Design TALM in Le Mans, France. She was a postdoctoral researcher at MEDIT BFM, Tallinn University, with a European Mobilitas + postdoctoral grant in 2022-23. She received a PhD from Aix-Marseille University in 2020 for her thesis entitled “Cinéma et Neurosciences, du Cinéma Émotif à Emotive VR”[1]. The thesis examines the cinema experience in the context of neuroscience research, describing the neuronal processes of emotion and reflecting further on the analogy between cinema and the thinking process.

She comes from an artistic background, having studied at Le Fresnoy, Studio National des Arts Contemporains, in the postgraduate residency programme of the Jan van Eyck Academy (Maastricht, Netherlands) and at the Ecole Nationale Supérieure des Beaux-Arts in Paris. She has conducted many art-science projects with scientific partners, which have been shown in numerous exhibitions and festivals, creating prototypes that renew the filmic experience. In her Emotive Cinema (2014) and Emotive VR (2020) projects she applies physiological feedback, such as EEG, to obtain an emotional analysis of the viewers’ brain activity that changes the film’s scenario.

[1] Cazin, M-L. 2020. Cinéma et neurosciences : du cinéma émotif à emotive VR. Access: https://www.theses.fr/2020AIXM0009

Mati Mõttus: Psycho-physiology and eye-tracking of cinematic VR

There are various ways to track our emotions through physiometric signals. The most common of these signals are the electrical conductance of the skin, facial expressions, cardiography and encephalography. In my talk I’d like to discuss the reliability and intrusiveness of physiological measurements in interactive art. While the reliability of measurements is not too critical in the domain of art, intrusive sensors on art-enjoyers’ bodies can easily spoil the artistic experience.

Mati Mõttus is a lecturer and researcher in the School of Digital Technologies, Tallinn University. His doctoral degree in computer science, in the field of human-computer interaction, focused on “Aesthetics in Interaction Design” (2018). His current research interests are hedonic experiences in human-computer interaction. The focus is two-fold: on the one hand, the use of psycho-physiological signals to detect users’ feelings while interacting with technology and to explain emotional behavior; on the other hand, the design of interactive systems based on psycho-physiological loops.

Robert McNamara: Empathic nuances with virtual avatars – a novel theory of compassion

Technological mediation is the idea that technology affects or changes us as we use it, either consciously or unconsciously. Digital avatars, whether encountered in VR or otherwise, are one such technology. Often used in concert with various machine learning applications, they pose potentially unknown and understudied interactional effects on humans, whether through their use in art, video games, or governmental applications (such as iBorderCtrl, the pilot program at EU borders which was used to detect traveler deception). One area where avatars may be of particular interest for academic study is their perceived ability not only to evoke the uncanny valley effect, but also to promote a “distancing effect.” It is hypothesized that interaction with avatars, as an artistic process, may also result in increased expressions of cognitive empathy through the conscious or unconscious process of abductive reasoning.

Robert McNamara has a background in American criminal law as well as degrees related to audiovisual ethnography and eastern classics. He is from New York State, but has lived in Tallinn for the last five years. Currently, his research focuses on the ethical, socio-political, anthropological, and legal aspects of employing human-like VR avatars and related XR technology. He is co-authoring journal papers with other experts on the team on the social and legal issues surrounding the use of artificial intelligence, machine learning, and virtual reality avatars for governmental immigration regimes. From 05/2020 to 09/2020 Robert G. McNamara was a visiting research fellow with the MOBTT90 team; since 10/2020 he has continued working with the project as a doctoral student. In the Enactive Virtuality Lab Robert has contributed to co-authored writing; the most recent paper accepted for publication is entitled “Well-founded Fear of Algorithms or Algorithms of Well-founded Fear? Hybrid Intelligence in Automated Asylum Seeker Interviews”, Journal of Refugee Studies, Oxford UP.

Debora C. F. de Souza: Self-rating of emotions in simulated immigration interview

Humans benefit from emotional interchange as a source of information to adapt and react to external stimuli and navigate their reality. Computers, on the other hand, rely on classification methods. They use models to calculate and differentiate affective information from other human inputs, based on the emotional expressions that emerge through human body responses, language, and behavior changes. Nevertheless, theoretically and methodologically, emotion is a challenging topic to address in Human-Computer Interaction. During her master’s studies, Debora explored methods for assessing physiological responses to emotional experience and aiding the emotion recognition features of Intelligent Virtual Agents (IVAs). Her study developed an interface prototype for emotion elicitation and simultaneous acquisition of the user’s physiological and self-reported emotional data.

Debora C. F. de Souza is a Brazilian visual artist and journalist. She graduated in Social Communication from the University of Mato Grosso do Sul Foundation in Brazil. Her artwork and research are marked by experiments with different kinds of images and audiovisual media. Having recently graduated with an MA in Human-Computer Interaction (HCI), she is now a doctoral student in Information Society Technologies and a Junior Researcher at the School of Digital Technologies at Tallinn University. In the Enactive Virtuality Lab, she is researching the implications of anthropomorphic virtual agents for human affective states and the implications of such interactions in collaborative and social contexts, such as medical simulation training.

SCHEDULE 14-18

14:00-14:10 Pia Tikka: State of Darkness (SoD) – Enactive VR experience

14:10-14:45 Tanja Bastamow – Designing performative scenography (SoD)

14:45-15:00 Ats Kurvet – Designing humanlike characters (SoD)

15:00-15:20 Matias Harju – Traits of Virtual Reality Sound Design

15:20-15:40 Marie-Laure Cazin: Freud’s last hypnosis, validating emotion-driven enactions in cinematic VR

15:40-16:00 Mati Mõttus: Experimenting with Emotive VR cinema

Break (10 min)

16:15-17:15 Robert McNamara: Empathic nuances with virtual avatars – a novel theory of compassion

17:15-17:35 Debora Souza: Self-rating of emotions in simulated immigration interview

17:35-18:00 Discussion

****

BFM PhD is inviting you to a scheduled Zoom meeting.

Topic: Enactive Virtuality Lab hybrid seminar

Time: Nov 24, 2022 10:00 Helsinki

Join Zoom Meeting

https://zoom.us/j/95032524868

Meeting ID: 950 3252 4868

One tap mobile

+3726601699,,95032524868# Estonia

+3728801188,,95032524868# Estonia

Dial by your location

+372 660 1699 Estonia

+372 880 1188 Estonia

+1 346 248 7799 US (Houston)

+1 360 209 5623 US

+1 386 347 5053 US

+1 507 473 4847 US

+1 564 217 2000 US

+1 646 558 8656 US (New York)

+1 646 931 3860 US

+1 669 444 9171 US

+1 669 900 9128 US (San Jose)

+1 689 278 1000 US

+1 719 359 4580 US

+1 253 205 0468 US

+1 253 215 8782 US (Tacoma)

+1 301 715 8592 US (Washington DC)

+1 305 224 1968 US

+1 309 205 3325 US

+1 312 626 6799 US (Chicago)

Find your local number: https://zoom.us/u/abUQJvmMJi

Join by Skype for Business

https://zoom.us/skype/95032524868
