Call for NEUROCINEMATIC papers – Baltic Screen Media Review, Special Issue 2023

Cinematic minds in making – Investigation of subjective and intersubjective experiences of storytelling

Guest editors Pia Tikka and Elen Lotman with Maarten Coëgnarts

Contact and submission to NeuroCineBFM@tlu.ee

One of the key foundations of everyday activities in society is intersubjectively shared communication between people. Stories, films, and other audiovisual narratives promote shared understanding of possible situations in other people’s lives. Narratives expose complex social situations with their ethical, political, and cultural contexts (Hjort and Nannicelli, 2022). They also allow humankind to learn from protagonists: from their positive examples and successes, but also from their mistakes, false motivations, and blinded desires that may lead to dramatic situations, sometimes even disasters. The exhaustive range of contextual situatedness that constitutes narratives serves not only entertainment and education, but also scientific studies of the human mind and behavior.

Since the beginning of this millennium, narratives mediated by films have allowed researchers to simulate complex socio-emotional events in behavioral and neuroimaging laboratories, accumulating new insights into human behavior, emotion, and memory, to name a few of many topics. The proponents of so-called naturalistic neuroscience and, in particular, its subfield neurocinematics (Hasson et al. 2008) have shown how experiencing naturally unfolding events evokes synchronized activations in large-scale brain networks across different test participants (see Jääskeläinen et al. 2021 for a review). The tightly framed contextual settings in cinematic narratives have opened a fresh window for researchers interested in understanding linkages between individual subjective experiences and intersubjectively shared experiences.

So far, neurocinematic studies have focused almost exclusively on mapping the correlations between narrative events and observed physiological behaviors of uninitiated test participants. With few exceptions (e.g. de Borst et al. 2016; Andreu-Sánchez et al. 2021), the knowledge accumulated so far tells us little about the affective or cognitive functions of the experts of audiovisual storytelling. With this Special Issue we want to extend the scope of studies to the embodied cognitive processes of storytellers themselves. As an example, consider the term “experiential heuristics” proposed by cinematographer Elen Lotman (2021) to describe the practice-based knowledge accumulation by cinematographers, or the embodied dynamics of the filmmaker in the process of simulating the experiences of the fictional protagonists and/or that of imagined viewers, described as “enactive authorship” (Tikka 2022). Another question that merits further understanding is how the embodied decision-making processes of filmmakers lead to the creation of dynamic embodied structures in the cinematic form. Appealing to the shared embodiment of both the filmmaker and the film viewer, these pre-conceptual patterns of bodily experience or “image schemas” have been argued to play a significant expressive role in the representation and communication of meaning in cinema (Coëgnarts 2019).

We call for papers that focus on the creative experiential processes of filmmakers, storytelling experts and their audiences. We encourage the proposed papers to discuss how the temporal unfolding of contextual situatedness depicted in narratives manifests in reported subjective experiences, observed body-brain behaviors, and time-locked content descriptions.

TOPICS

We invite boldly multidisciplinary papers to contribute with theoretical, conceptual and practical approaches to the experiential nature of filmmaking and viewing. They may draw, for instance, from social and cognitive sciences, psychophysiology, neurosciences, ecological psychology, affective computing, cognitive semantics, aesthetics, or empirical phenomenology.

Accordingly, we encourage papers that discuss the relations of data from these approaches, all concerning the film experience of film professionals and/or film viewers. A submission may also focus on a specific expertise, for example that of the writer, editor, cinematographer, scenographer or sound designer. The papers may describe, for example, 1) subjective experiences; 2) intersubjectively shared experiences; 3) context or content annotation; 4) semantic description; 5) first-person phenomenal description; and/or 6) physiological observation (e.g. neuroimaging, eye-tracking, psychophysiological measures).

Contributions addressing topics such as (but not limited to) the following are particularly welcome:

- Social cognition and embodied intersubjectivity

- Interdisciplinary challenges for methods; annotations linking cinematic features to physiological data

- First- and second-person methodologies; empirical phenomenological observations

- Embodied enactive mind, embodied simulation, and theory of mind

- Embodied metaphors in film; embodied film style; bodily basis of shared film language; semantics

- Cinematic empathy; emotions; simulation of character experiences

- Audience engagement; immersion; cognitive identification

- Temporality of experiences; context-dependent memory coding; story reconstruction; narrative comprehension

- Experiential heuristics; multisensory and tacit knowledge

- Storytelling strategies; aesthetics; film and media literacy; genre conventions

GUIDELINES

We will accept long research articles (4000–8000 words, excluding references) and short articles and commentaries (2000–2500 words, excluding references). Submitted papers need to follow the Submission guidelines.

All submissions should be sent as email attachments to the guest editors at NeuroCineBFM@tlu.ee

BSMR embraces visual storytelling; we thus invite authors to use photos and other illustrations as part of their contributions. See journal info: https://sciendo.com/journal/BSMR

Key dates

01.04.2023 – Submit abstracts of 200–300 words.

10.04.2023 – Acceptance of abstracts

30.06.2023 – Submit full manuscripts for blind peer review

20.09.2023 – Resubmit revisions

31.12.2023 – Special Issue published online

This issue of BSMR will be published both online and in print in December 2023.

Guest editors

Maarten Coëgnarts https://www.filmeu.eu/alliance/people/maarten-coegnarts

Elen Lotman https://www.filmeu.eu/alliance/people/elen-lotman

Pia Tikka https://www.etis.ee/CV/Pia_Tikka/eng

References

Andreu-Sánchez, C., Martín-Pascual, M. A., Gruart, A., & Delgado-García, J. M. (2021). The effect of media professionalization on cognitive neurodynamics during audiovisual cuts. Frontiers in Systems Neuroscience, 15, 598383. https://doi.org/10.3389/fnsys.2021.598383

de Borst, A. W., Valente, G., Jääskeläinen, I. P., & Tikka, P. (2016). Brain-based decoding of mentally imagined film clips and sounds reveals experience-based information patterns in film professionals. NeuroImage, 129, 428–438. https://doi.org/10.1016/j.neuroimage.2016.01.043

Hasson, U., Landesman, O., Knappmeyer, B., Vallines, I., Rubin, N., & Heeger, D. (2008). Neurocinematics: The Neuroscience of Film. Projections, 2, 1–26. https://doi.org/10.3167/proj.2008.020102

Hjort, M., & Nannicelli, T. (Eds.) (2022). The Wiley Blackwell Companion to Motion Pictures and Public Value. Wiley-Blackwell Press.

Jääskeläinen, I. P., Sams, M., Glerean, E., & Ahveninen, J. (2021). Movies and narratives as naturalistic stimuli in neuroimaging. NeuroImage, 224, 117445. https://doi.org/10.1016/j.neuroimage.2020.117445

Lotman, E. (2021). Experiential heuristics of fiction film cinematography. PhD Diss. Tallinn University.

Tikka, P. (2022). Enactive Authorship: Second-Order Simulation of the Viewer Experience – A Neurocinematic Approach. Projections: The Journal for Movies and Mind, 16(1), 47–66. https://doi.org/10.3167/proj.2022.160104

Tanja Bastamow is a virtual scenographer working with experimental projects combining scenography with virtual and mixed reality environments. Bastamow’s key areas of interest are immersive virtual environments, the creative potential of technology as a tool for designing emergent spatial narratives, and creating scenographic encounters in which human and non-human elements can mix in new and unexpected ways. Currently, she is a doctoral candidate at Aalto University’s Department of Film, Television and Scenography, doing research on the performative possibilities of real-time virtual environments. In addition to this, she is working in LiDiA – Live + Digital Audiences artistic research project (2021-23) as a virtual designer. She is also a founding member of Virtual Cinema Lab research group and has previously held the position of lecturer in digital design methods at Aalto University.

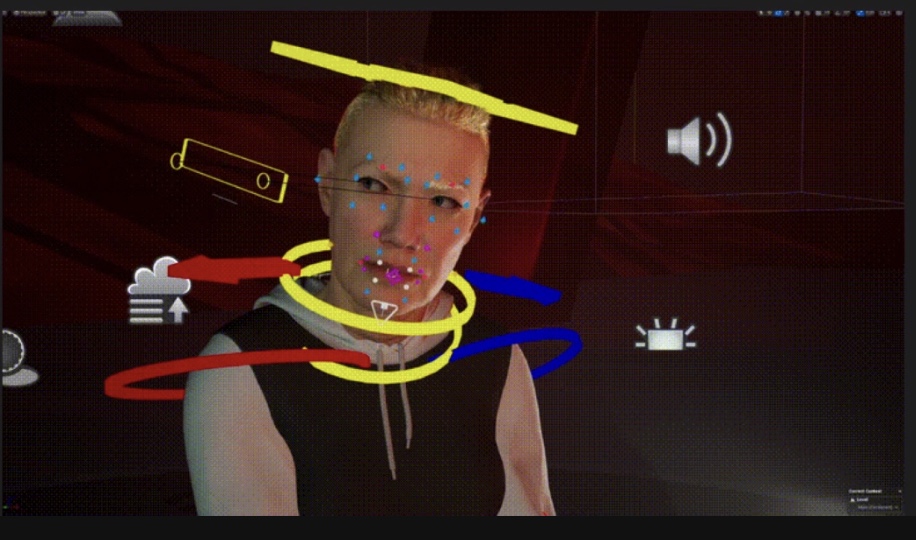

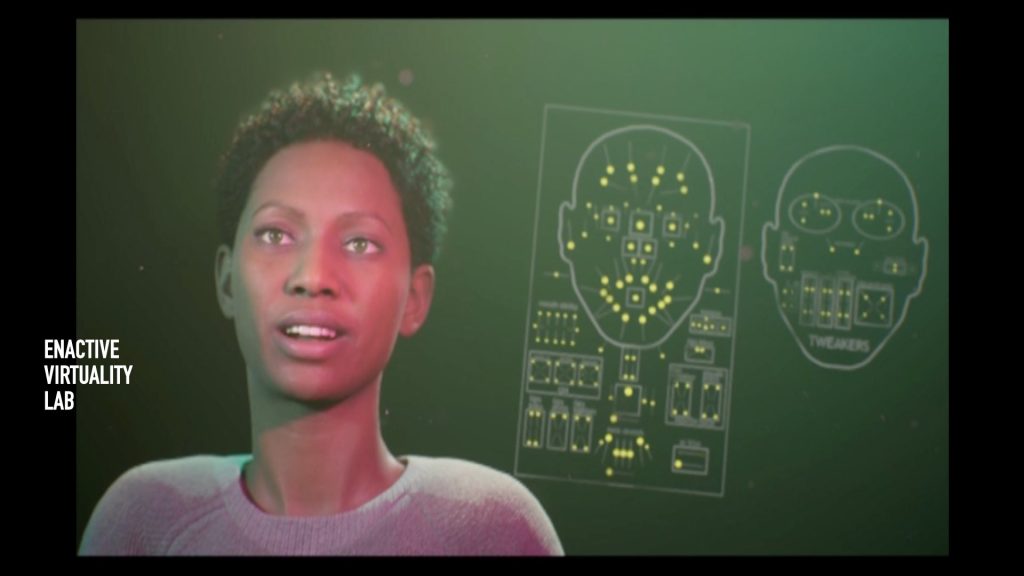

Ats Kurvet is a 3D real-time graphics and virtual reality application developer and consultant with over 8 years of industry experience. He specialises in lighting, character development and animation, game and user experience design, 3D modeling and environment development, shaders and material development, and tech art. He has worked for Crytek GmbH as a lighting artist and runs ExteriorBox OÜ. His work with the Enactive Virtuality Lab focuses on researching the visual aspects of digital human development and implementation.

Matias Harju is a sound designer and musician specialising in immersive and interactive sonic storytelling. He is currently developing Audio Augmented Reality (AAR) as a narrative and interactive medium at WHS Theatre Union in Helsinki. He is also active in making adaptive music and immersive sound design for other projects including VR, AR, video games, installations, and live performances. Matias holds a Master of Music from Sibelius Academy with a background in multiple musical genres, music education, and audio technology. He also holds a master’s degree from the Sound in New Media programme at Aalto University.

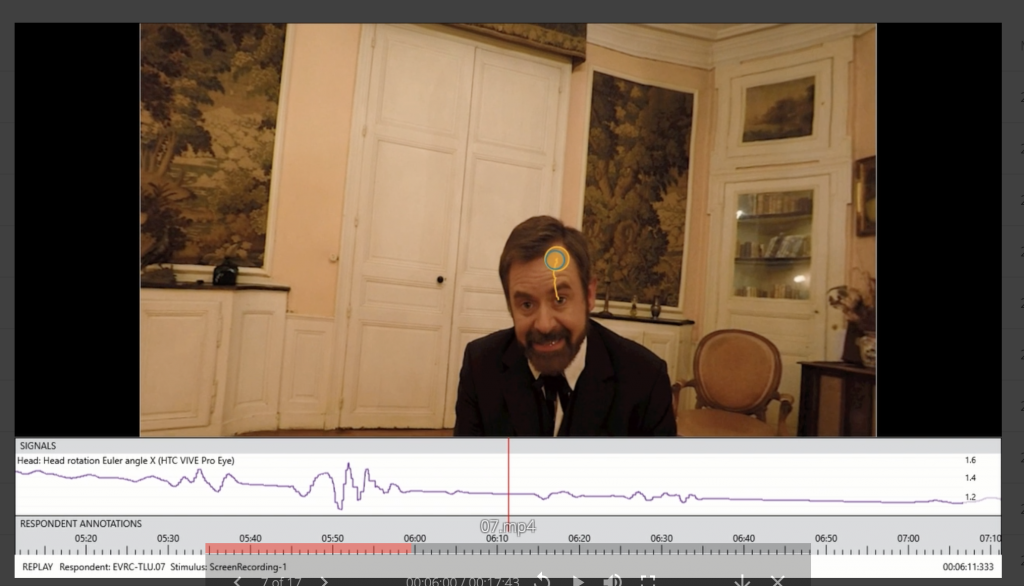

Marie-Laure Cazin is a French filmmaker and researcher. She teaches at Ecole Supérieure d’Art et de Design-TALM in France. She was a postdoctoral researcher at MEDIT BFM, Tallinn University, with a European postdoctoral grant Mobilitas + in 2022-23. She received a PhD from Aix-Marseille University for her thesis entitled “Cinema et Neurosciences, du Cinéma Émotif à Emotive VR”.

Robert McNamara has a background in American criminal law as well as degrees related to audiovisual ethnography and eastern classics. He is from New York State, but has lived in Tallinn for the last five years. Currently, his research focuses on the ethical, socio-political, anthropological, and legal aspects of employing human-like VR avatars and related XR technology. He is co-authoring journal papers with other experts on the team on the social and legal issues surrounding the use of artificial intelligence, machine learning, and virtual reality avatars for governmental immigration regimes. During 05-09/2020 Robert G. McNamara worked as a visiting research fellow with the MOBTT90 team. Since 10/2020 he has continued working with the project as a doctoral student. In the Enactive Virtuality Lab Robert has contributed to co-authored writing; the most recent paper accepted for publication is entitled “Well-founded Fear of Algorithms or Algorithms of Well-founded Fear? Hybrid Intelligence in Automated Asylum Seeker Interviews”, Journal of Refugee Studies, Oxford UP.
