TRISOLDE – Neuroadaptive Gesamtkunstwerk: The Biocybernetic Symbiosis of Tristan and Isolde

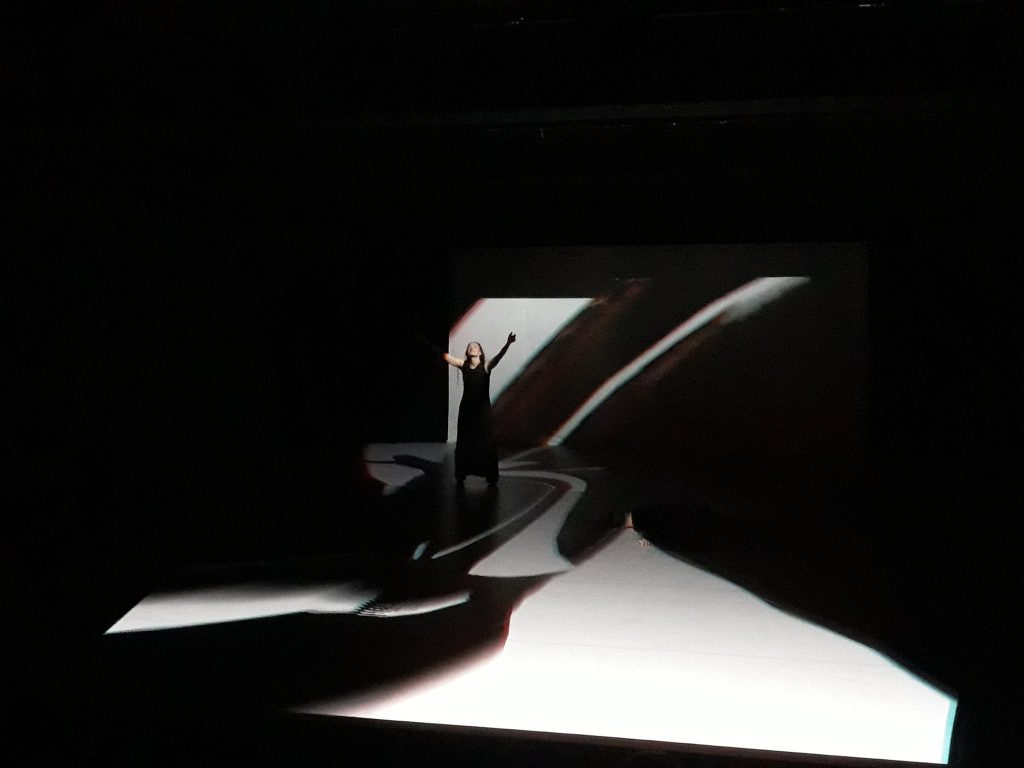

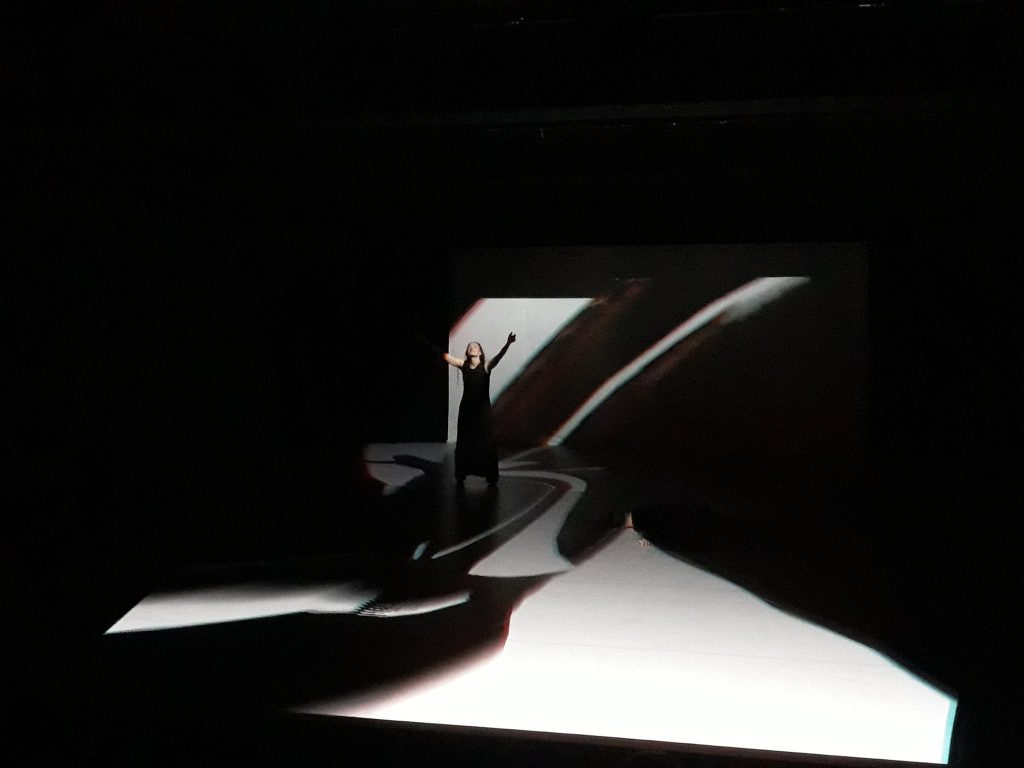

Exploring the final frontier of human-computer interaction with a neuroadaptive opera… performed by the audience, dancers and computational creativity.

The “TRISOLDE” team (Tiina Ollesk, Simo Kruusement, Renee Nõmmik, Ilkka Kosunen, Hans-Günther Lock, Giovanni Albini) performed at the festival “IndepenDance” in Göteborg, Nov 29 and Dec 2, 2019.

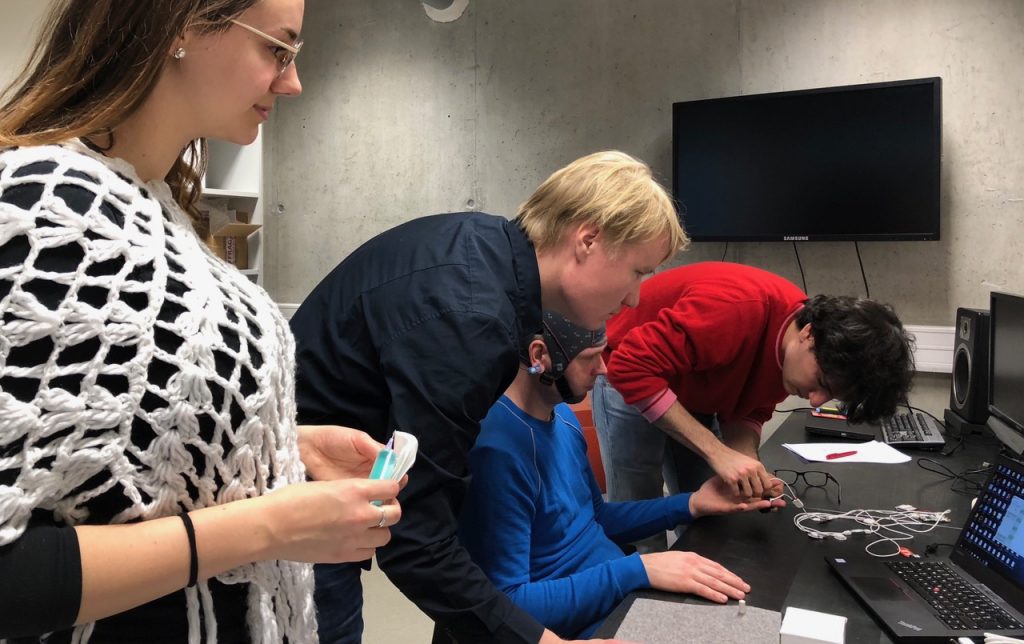

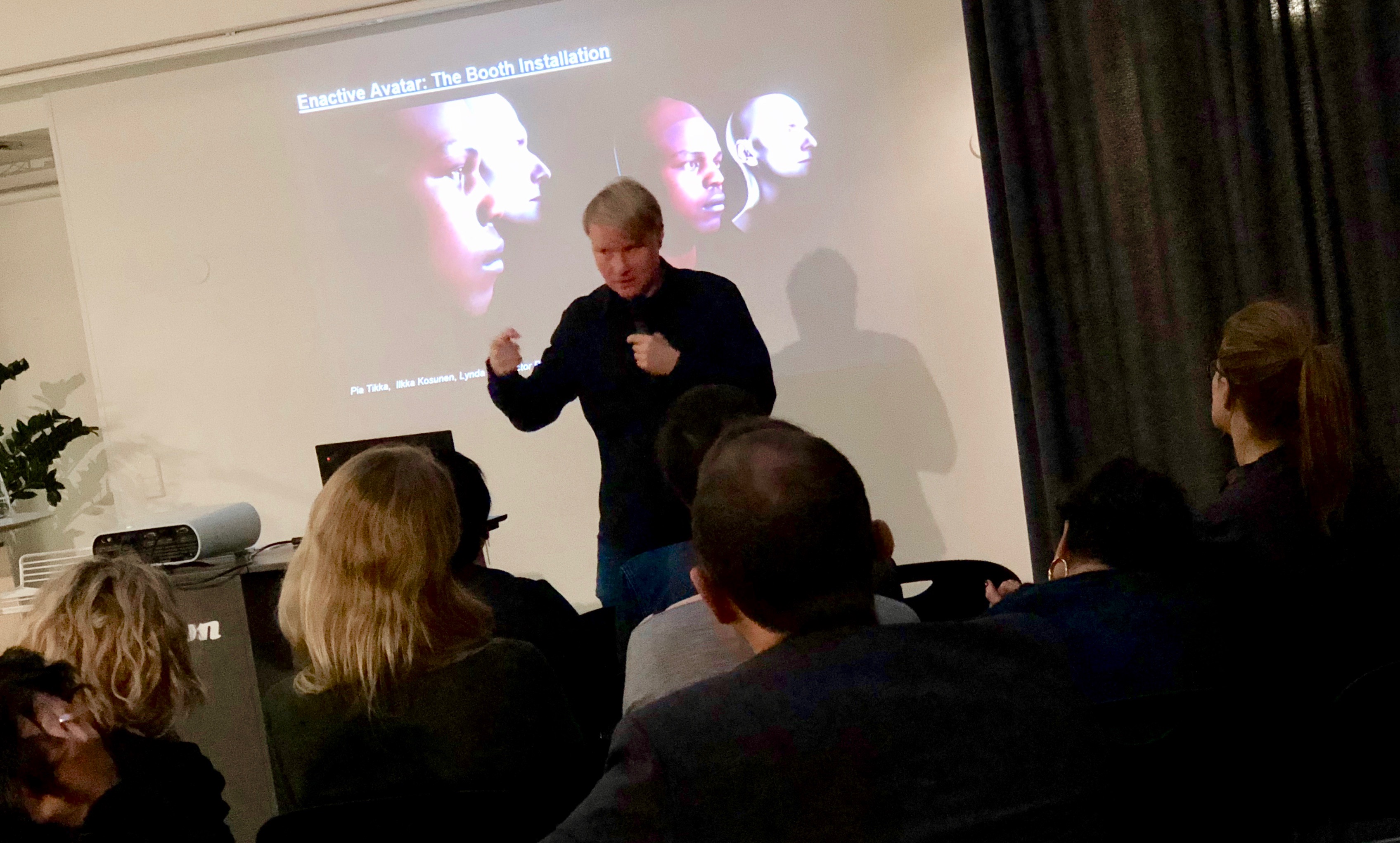

A symbiotic dance version of Wagner's “Tristan and Isolde” in which the dancers control the music through body movements and implicit psychophysiological signals. This work explores the next step in the coming-together of man and machine: the symbiotic interaction paradigm, in which the computer automatically senses the cognitive and affective state of the user and adapts appropriately in real time. It brings together many exciting fields of research, from computational creativity to physiological computing. Measuring the audience and using its reactions to modulate the orchestra is a new way of doing “participatory theatre”, in which the audience becomes part of the performance.

“Tristan and Isolde” is widely considered both one of the greatest operas of all time and the beginning of modernism in music, introducing techniques such as chromaticism, dissonance and even atonality. It has sometimes been described as a “symphony with words”; the opera lacks major stage action, large choruses or a wide range of characters. Most of the “action” in the opera happens inside the heads of Tristan and Isolde. This provides amazing possibilities for a biocybernetic system: in this case, Tristan and Isolde communicate both explicitly (through the movement of the dancers) and implicitly (via the measured psychophysiological signals).

Dance artists: Tiina Ollesk, Simo Kruusement

Choreographer-director: Renee Nõmmik

Dramaturgy and science of biocybernetic symbiosis: Ilkka Kosunen

Composers for interactive audio media: Giovanni Albini, Hans-Gunter Lock

Video interaction: Valentin Siltsenko

Duration: 40’

This performance is supported by the Cultural Endowment of Estonia, and by the Enactive Virtuality Lab and the Digital Technology Institute (biosensors), Tallinn University.

Presentation of the project: November 29th-30th and December 1st, 2018, at 3:e Våningen, Göteborg (Sweden), at the festival IndepenDance. The event is dedicated to the centenary of the Republic of Estonia and supported by the programme “Estonia100-EV100”.

PREMIERE IN TALLINN FEBRUARY 2019 (see more: Fine 5 Theater)

