Image: Dr Johannes Riis opens the plenary session honouring the academic career and contributions of Professor Torben Grodal at the SCSMI, chaired by Dr Stephen Prince (right corner). The five invited speakers on June 13th also included Ed Tan, Mette Kramer, Pia Tikka, and David Bordwell.

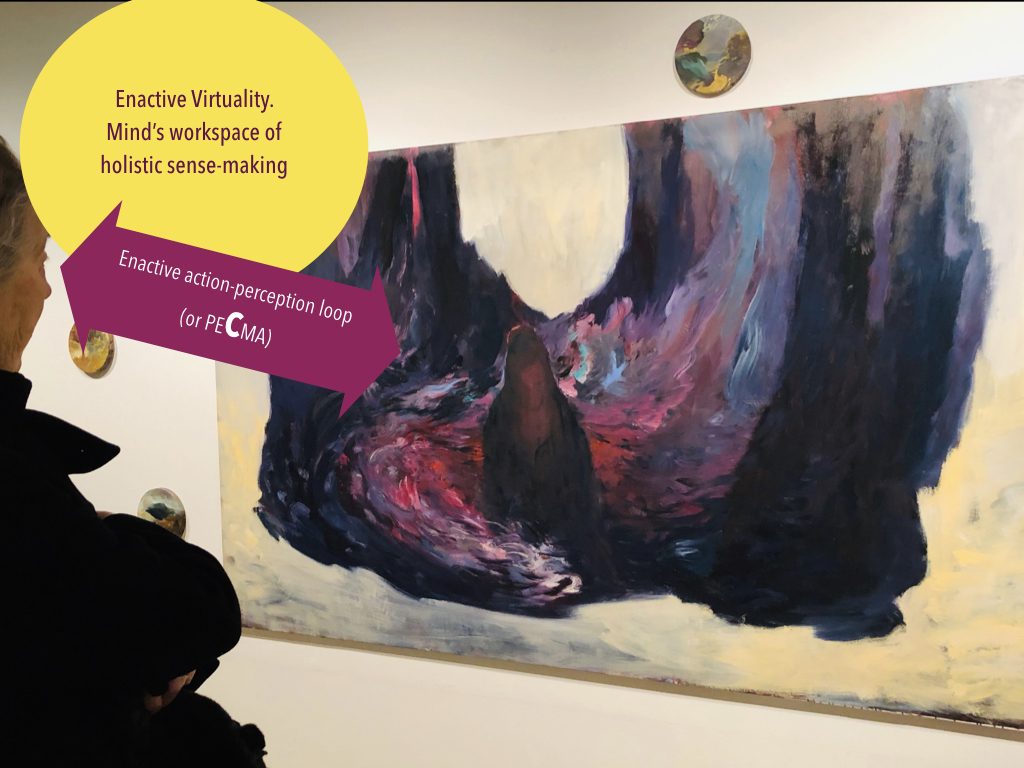

Conference presentation by Pia Tikka: Enactive Virtuality: Modelling triadic epistemology of narrative co-presence

The presentation discusses the concept of enactive virtuality in terms of a triadic epistemology, in which holistic understanding is accumulated by reflecting subjective experience against its psychophysiological epiphenomena and varying narrative contexts. Film narrative can evoke strong emotional identification with the screen character, but it does so in a context-dependent manner. The aim is to deepen the holistic understanding of cinematic narrative, in particular as a simulated person-to-person encounter. Two case studies of person-to-person encounter are discussed to make this concrete. The first setting applies conventional film as a model of life situations, while the second, dramatic setting assigns the viewer an enactive role in engaging with an artificial screen character. The two settings are compared and analysed in terms of this triadic epistemology.