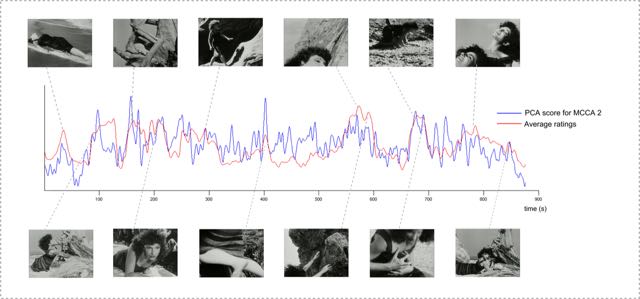

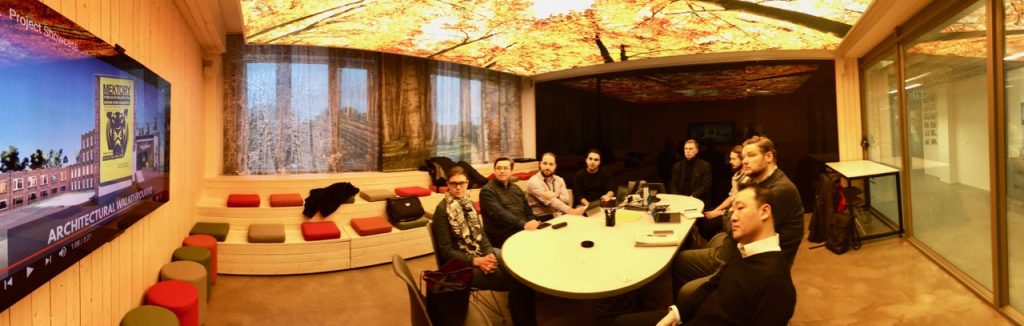

In collaboration with BeAnotherLab (The Machine to Be Another), Lynda Joy Gerry taught a workshop, “Embodying Creative Expertise in Virtual Reality,” to Master’s students in Interaction Design at Zürcher Hochschule der Künste (ZHdK). The workshop was part of the course “Ecological perception, embodiment, and behavioral change in immersive design,” led by BAL members.

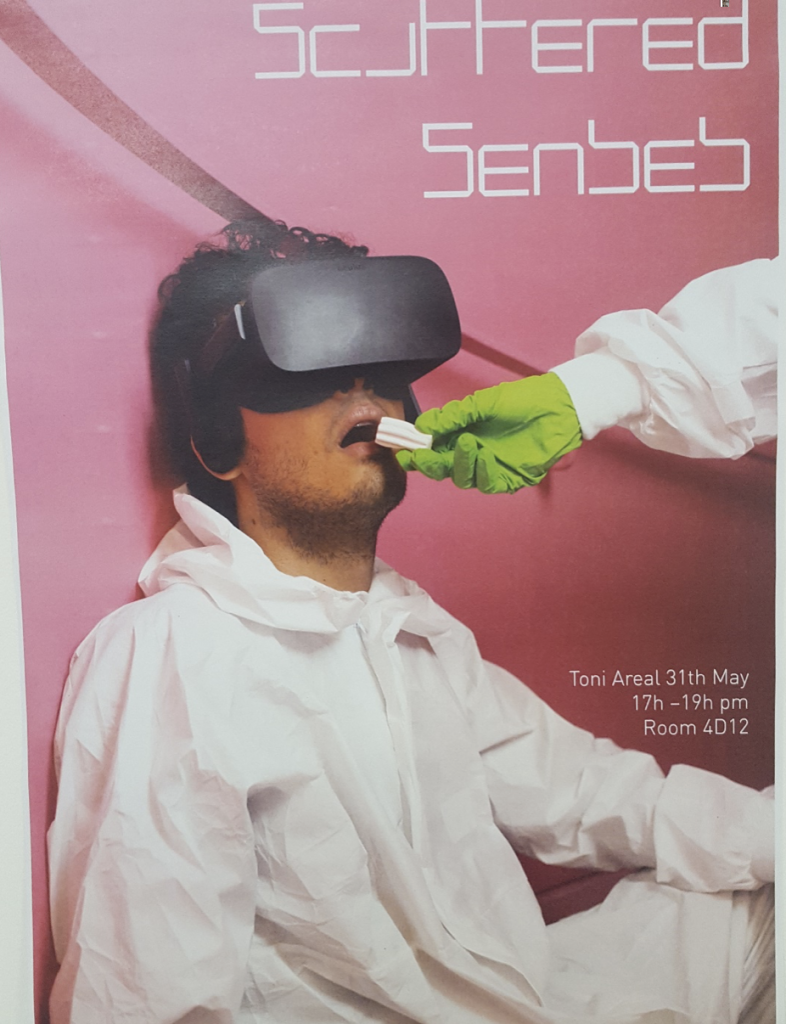

Image: Poster for the students’ final project presentation and exhibition.

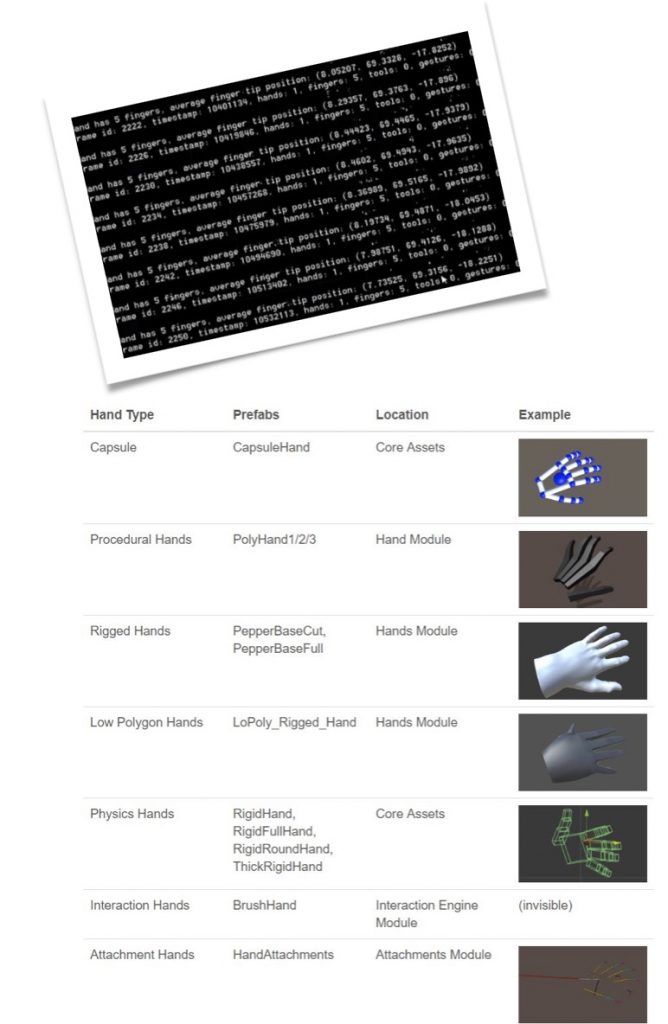

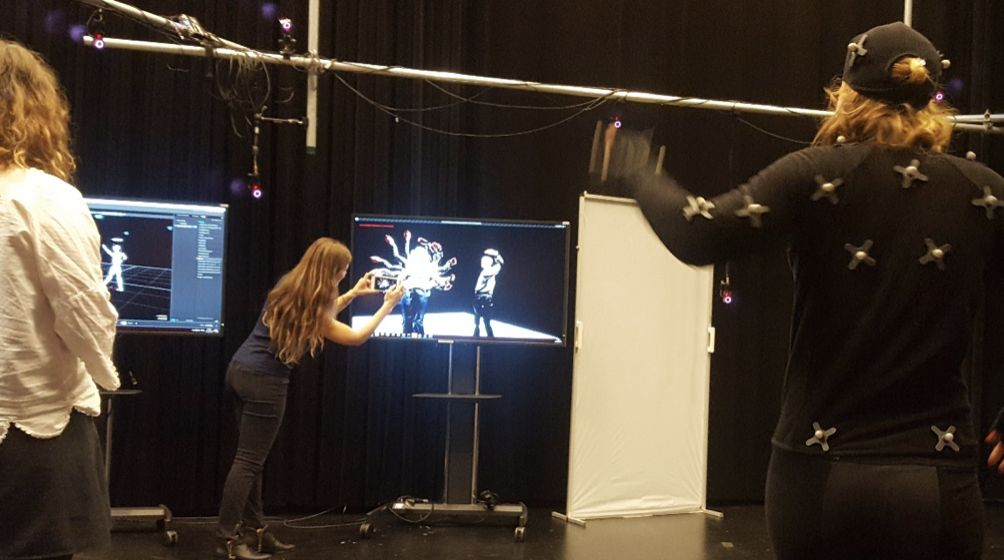

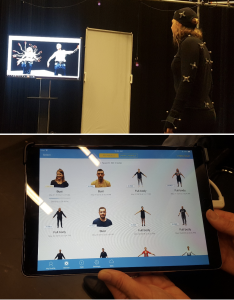

Lynda taught students design approaches that use a semi-transparent video overlay of another person’s first-person, embodied experience, as in First-Person Squared. The workshop focused on Leap Motion hand-tracking data and measurements. It covered how to calculate compatibility and interpersonal motor coordination as a match score between the two participants, and how to send this data over a network. The system gives motor feedback for two kinds of interpersonal motor coordination: imitative gestures that are similar in form and position, and gestures that occur synchronously (at the same time). The aim is to support both.

Lynda taught students the equations and data inputs needed to compute the different match scores, and how to add interaction effects driven by this data. She also showed them how to overlay Leap Motion hand tracking on stereoscopic point-of-view video and how to record users’ hand movements. On the 31st, students premiered their final projects at an event entitled “Scattered Senses.”
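The post does not give the equations used in the workshop. The sketch below is a hypothetical illustration of how a two-part match score *could* be computed from two participants’ tracked hand data. It is not the First-Person Squared algorithm. It makes these assumptions:

- Each hand is reduced to a palm-position trajectory of (x, y, z) points in millimetres, sampled at a fixed interval `dt`.
- "Form and position" similarity is the mean distance between corresponding samples, mapped linearly to [0, 1] using a hypothetical `max_dist_mm` cutoff.
- "Synchrony" is the zero-lag Pearson correlation of the two hand-speed profiles, with negative values clipped to 0.
- The two parts are blended with a hypothetical weight `w_pos`.

All function and parameter names are illustrative.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]  # assumed palm position (x, y, z) in mm


def position_similarity(a: Sequence[Vec3], b: Sequence[Vec3],
                        max_dist_mm: float = 200.0) -> float:
    """Hypothetical form/position score in [0, 1]: 1.0 when the trajectories
    coincide, falling linearly to 0.0 at a mean distance of max_dist_mm."""
    if len(a) != len(b) or not a:
        raise ValueError("trajectories must be non-empty and equal length")
    mean_d = sum(math.dist(p, q) for p, q in zip(a, b)) / len(a)
    return max(0.0, 1.0 - mean_d / max_dist_mm)


def speeds(traj: Sequence[Vec3], dt: float) -> List[float]:
    """Speed between consecutive samples (mm per time unit of dt)."""
    return [math.dist(p, q) / dt for p, q in zip(traj, traj[1:])]


def synchrony(a: Sequence[Vec3], b: Sequence[Vec3], dt: float) -> float:
    """Hypothetical timing score in [0, 1]: zero-lag Pearson correlation of
    the two speed profiles, with anti-correlation clipped to 0."""
    if len(a) != len(b):
        raise ValueError("trajectories must be equal length")
    sa, sb = speeds(a, dt), speeds(b, dt)
    n = len(sa)
    if n < 2:
        return 0.0  # not enough samples to correlate
    ma, mb = sum(sa) / n, sum(sb) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(sa, sb))
    va = sum((x - ma) ** 2 for x in sa)
    vb = sum((y - mb) ** 2 for y in sb)
    if va == 0 or vb == 0:
        return 0.0  # a motionless or constant-speed hand has no timing signal
    return max(0.0, cov / math.sqrt(va * vb))


def match_score(a: Sequence[Vec3], b: Sequence[Vec3],
                dt: float = 1 / 60, w_pos: float = 0.5) -> float:
    """Weighted blend of position similarity and synchrony (assumed weighting).
    The default dt assumes a 60 Hz sampling rate."""
    return w_pos * position_similarity(a, b) + (1 - w_pos) * synchrony(a, b, dt)


if __name__ == "__main__":
    a = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (3.0, 0.0, 0.0), (6.0, 0.0, 0.0)]
    # Same movement, offset 100 mm upward: perfectly in time, partly in position.
    b = [(x, y + 100.0, z) for x, y, z in a]
    print(match_score(a, b, dt=1.0))  # 0.5 * 0.5 + 0.5 * 1.0 = 0.75
```

This split lets an imitative gesture performed slightly late still earn a position score, while two different gestures performed in time still earn a synchrony score. That mirrors the two kinds of coordination described above. A real system would likely compare several joints per hand and search over small time lags.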

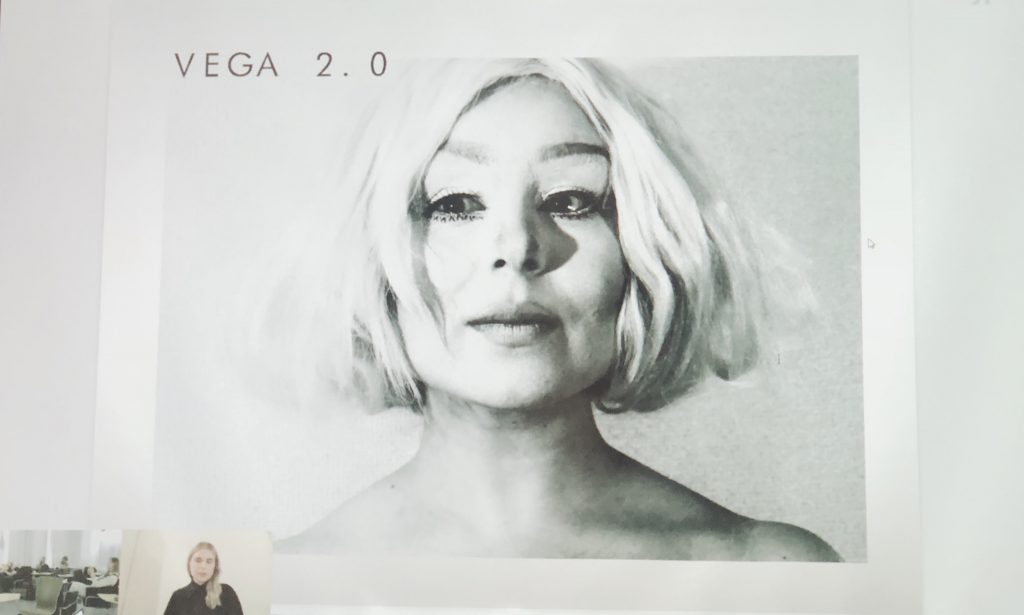

Testing how the viewer’s facial expressions drive the behavior of an on-screen character, using Louise’s Digital Double (under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 license).

In the image: Lynda Joy Gerry, Dr. Ilkka Kosunen, and Turcu Gabriel, Erasmus exchange student from the

See full program