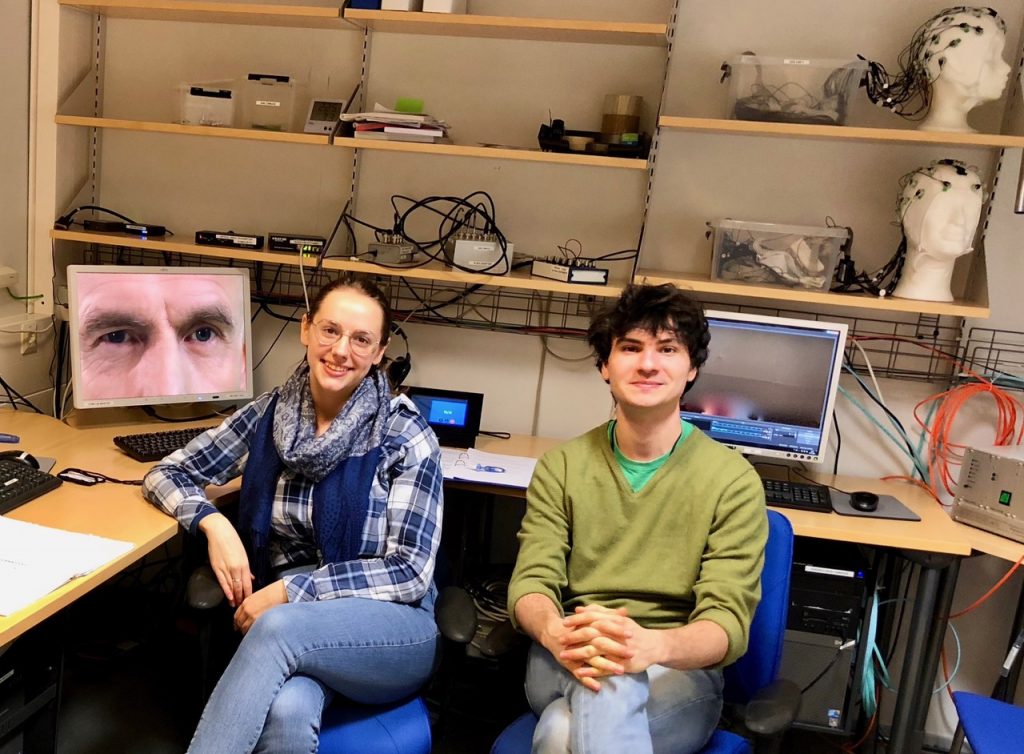

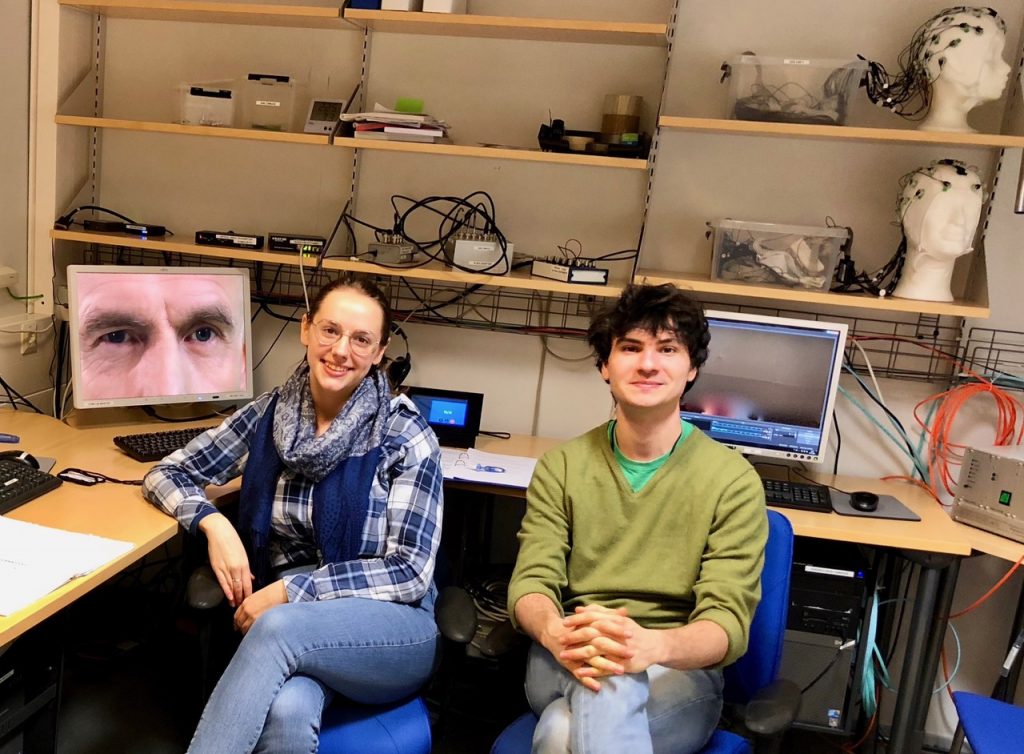

Enactive Virtuality Lab is collaborating with the Brain and Mind Lab of the Aalto University School of Science on a study of how narrative priming affects viewers' construction of narrative. The study is ongoing.

Enactive Virtuality Research Group, Tallinn University

Activities related to narratives and narrative systems, art-science, academic and popular events, and related industry engagements


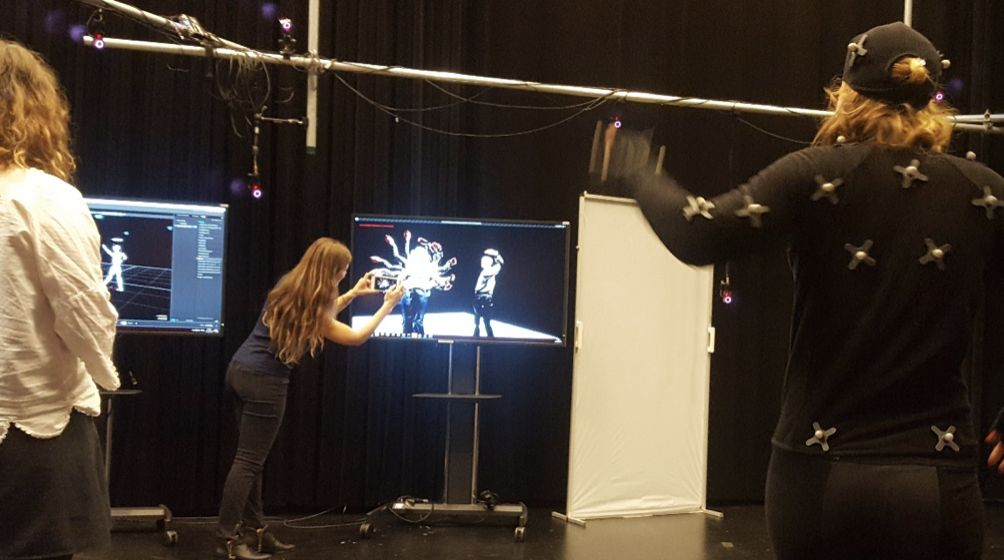

Pia Tikka and Victor Pardinho (video) presenting the latest state of the Enactive Avatar pipeline at a meeting of the Estonian VR community at the University of Tartu, Oct 6, 2018.

If you are interested in PhD studies with the Enactive Virtuality research group, contact piatikka@tlu.ee

Contact for general information on BFM PhD studies: katrin.iisma@tlu.ee

But first things first, see:

https://www.tlu.ee/en/bfm/audiovisual-arts-and-media-studies

A young girl, Nora, stares in shock at her mother, Anu. Anu stands expressionless by the kitchen table and scrapes the leftover spaghetti from Nora’s plate into a plastic bag. She places the plate in the bag, starts putting the other dishes in as well, takes a firm hold of the bag and smashes it against the table. Nora is horrified: “Mother! What are you doing?” Anu keeps smashing the bag without paying attention to her daughter. Nora begs her to stop. Anu collapses, crying, onto the tabletop. Nora approaches, puts her arms around her crying mother and slowly starts caressing her hair.

The dramatic scene describes a daughter witnessing her mother's nervous breakdown. Its narrative content remains the same whether one reads it as text or views it as a movie. It is relatively well known how narratives are processed in the distinct human sensory cortices depending on the sensory input through which the narrative is perceived (reading, listening, viewing; [1–5]). However, far less is known about how the human brain processes meaningful narrative content independently of the medium of presentation. To tackle the classical dichotomy between form and content in neuroimaging terms, we employed functional magnetic resonance imaging (fMRI) to provide new insights into the brain networks related to a particular narrative content regardless of its form.

In the image: Nora (actress Rosa Salomaa). Director Saara Cantell, cinematography Marita Hällfors (F.S.C.), producer Outi Rousu, Pystymetsä Oy, 2010.

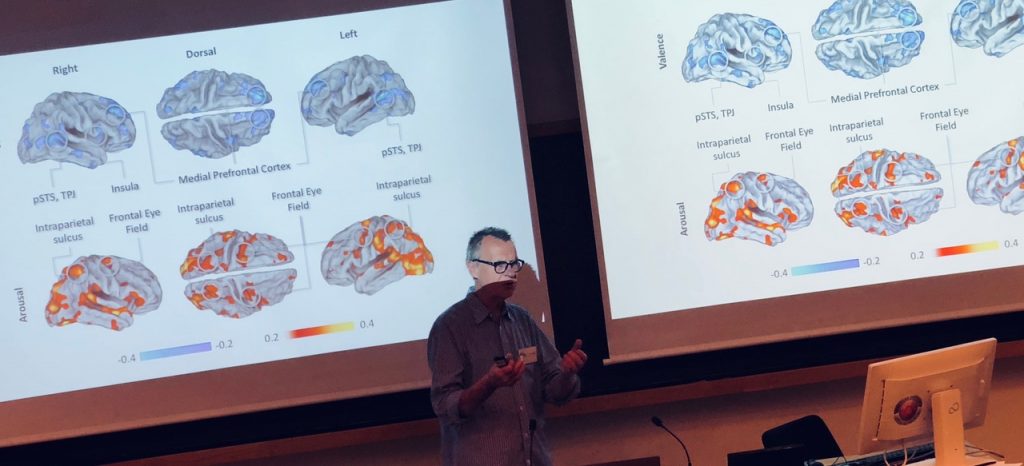

Narratives surround us in our everyday life in different forms. In the sensory brain areas, the processing of narratives depends on the medium of presentation, be it audiovisual or written. However, little is known about the brain areas that process complex narrative content conveyed in different forms. To isolate these regions, we looked for functional networks that respond similarly to the same narrative content across different media of presentation. We collected whole-brain 3T fMRI data from 31 healthy human adults during two separate runs in which they either viewed a movie or read its screenplay. Independent component analysis (ICA) was used to extract 40 components. By correlating the components’ time courses between the two media conditions, we isolated five functional networks specifically related to the same narrative content. These TOP-5 components with the highest correlation covered fronto-temporal, parietal, and occipital areas, with no major involvement of the primary visual or auditory cortices. Interestingly, the top-ranked network, with the highest modality invariance, also correlated negatively with the dialogue predictor, indicating that narrative comprehension entails processes that do not rely on language. In summary, our novel experimental design provided new insight into narrative comprehension networks across modalities.
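The component-selection step in the abstract (correlating each ICA component's time course between the movie and screenplay runs, then keeping the five most correlated) can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the study's analysis pipeline: it assumes ICA has already been run, that the same 40 components are available for both conditions as arrays of shape (components, timepoints), and that the two runs have already been aligned to a common narrative timeline (how reading and viewing were aligned is not described here). The function name `rank_modality_invariant_components` is hypothetical.

```python
import numpy as np


def rank_modality_invariant_components(tc_movie, tc_text, top_k=5):
    """Rank ICA components by the Pearson correlation of their time courses
    between two conditions (e.g. movie viewing vs. screenplay reading).

    Assumption: tc_movie and tc_text have shape (n_components, n_timepoints),
    row i is the same component in both, and the two runs are already
    resampled onto a shared narrative timeline.
    Returns (indices of the top_k components, per-component correlations).
    """
    a = np.asarray(tc_movie, dtype=float)
    b = np.asarray(tc_text, dtype=float)
    if a.shape != b.shape:
        raise ValueError("time-course arrays must have the same shape")

    # z-score each component's time course within each condition
    a = (a - a.mean(axis=1, keepdims=True)) / a.std(axis=1, keepdims=True)
    b = (b - b.mean(axis=1, keepdims=True)) / b.std(axis=1, keepdims=True)

    # Pearson r per component = mean product of the z-scored time courses
    r = (a * b).mean(axis=1)

    # order components from highest to lowest cross-condition correlation
    order = np.argsort(r)[::-1]
    return order[:top_k], r
```

For example, with two `(40, n_timepoints)` arrays, `top, r = rank_modality_invariant_components(movie_tc, text_tc)` returns the indices of the five components whose activity tracks the narrative most similarly in both media. Plain Pearson correlation is used here only because the abstract speaks of correlating time courses; the study may have used a different statistic or group-level procedure.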

In this article, Maya Deren’s film At Land is analyzed as the expression of a human body-brain system that is situated and enactive in the world, with reference to neuroscience, neurocinematic studies, and screendance.

TIKKA, Pia. Screendance as enactment in Maya Deren’s At Land. DATJournal Design Art and Technology, [S.l.], v. 3, n. 1, p. 9-28, June 2018. ISSN 2526-1789. First published in The Oxford Handbook of Screendance Studies, edited by Douglas Rosenberg, Aug. 2016. Available online here.

As part of the Frontières du Vivant programme, Lynda Joy Gerry presented and defended her project “Compassion Cultivation Training in Bio-Adaptive Virtual Environments” at the Centre for Research and Interdisciplinarity (CRI) in Paris. The project combines perspective-taking in virtual environments with biofeedback related to emotion regulation (heart rate variability) to support recovery from empathic distress. Empathic distress is conceived as a step in the empathic process towards understanding another person’s affective, bodily, and psychological state, but one that can lead to withdrawal and personal distress for the empathizer. The project therefore implements instruction techniques adapted from Compassion Cultivation Training guided-meditation practices, cued by biofeedback, to cultivate clearer self-other boundaries and distinctions as well as better emotion regulation.

Lynda also participated in a workshop on VR and Empathy led by Philippe Bertrand from BeAnother Lab (BAL, The Machine to Be Another). See Philippe Bertrand’s TEDx talk “Standing in the shoes of the others with VR”.

Hybrid Labs Symposium

The Third Renewable Futures Conference

May 30 – June 1, 2018, Aalto University, Otaniemi Campus

Hybrid Labs is the third edition of the Renewable Futures conference, which aims to challenge the future of knowledge creation through art and science. HYBRID LABS took place from May 30 to June 1, 2018 at Aalto University in Espoo, Finland, in the context of Aalto Festival. Celebrating 50 years of the Leonardo journal and community, the HYBRID LABS conference looked back at the history of art and science collaboration, with the intent to reconsider and envision the future of hybrid laboratories, where scientific research and artistic practice meet and interact.

http://hybridlabs.aalto.fi/hls2018-symposium/

Pia Tikka and Mauri Kaipainen:

Triadic epistemology of narrative experience

We consider narrative experience as a triangular system of relations between narrative structure, narrative perspective, and the physiological manifestations associated with both. The proposal builds on the fundamentally pragmatist idea that no two of these elements are enough to explain each other; a third is always required to explicate the interpretative angle. Phenomenological accounts altogether reject the idea of objective descriptions of experience. At the same time, a holistic understanding must assume that a narrative is shared on some level, as narratology must assume, and that even individual experiences are embodied, as is evident to neuroscientists observing brain activity evoked by narrative experience. These accounts cannot remain incompatible forever. Using these elements, we discuss a triadic epistemology, a mutually complementary knowledge construction system that combines phenomenological, narratological and physiological angles in order to generate integrated knowledge about how different people experience particular narratives.

Our approach assumes a holistic or, more deeply, an enactive perspective on experiencing, that is, it assumes systemic engagement in embodied, social, and situational environmental processes. Consequently, we propose that narrative content needs to be analyzed not only on the basis of the experiencer’s subjective reports but also in relation to the neurophysiological manifestations of the experience. Conversely, describing the neural activity associated with viewing a film is not enough to relate it to the viewers’ subjective reports; the observations also need to be interpreted in light of storytelling conventions. A selection of cases is described to clarify the proposed triadic method.

Keywords: neurophenomenology, narrative experience, narrative perspective, enactive theory of mind, epistemology

eFilm: Hyperfilms for basic and clinical research

Presented by my aivoAALTO collaborator Professor Mikko Sams, the talk showed highlights of neuroscience findings related to viewing films in fMRI and introduced the concept of eFilm, a novel computational platform for producing and easily modifying films for use in basic and clinical research.

VR Research Talks, organised with the Virtual Cinema Lab and FiVR, was a track dedicated to research in and around VR, with a focus on artistic praxes around sound, alternative narrations and the self.

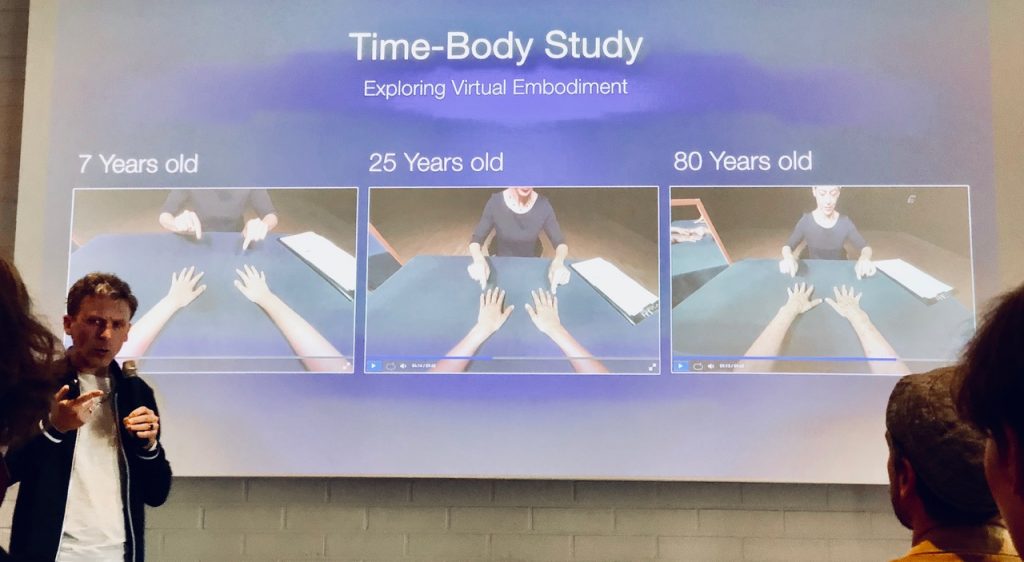

Daniel Landau: Meeting Yourself in Virtual Reality and Self-Compassion

Self-reflection is the capacity of humans to exercise introspection and the willingness to learn more about their fundamental nature, purpose, and essence. Between the internal process of self-reflection and the external observation of one’s reflection runs a thin line marking the relationship between the private self and the public self. From Narcissus’s pond, through reflective surfaces and mirrors, to present-day selfies, the concepts of self, body image and self-awareness have been strongly influenced by human interaction with physical reflections. In fact, one can say that the evolution of technologies reproducing images of ourselves has played a major role in the evolution of the Self as a construct. With the current wave of Virtual Reality (VR) technology taking its first steps as a consumer product, we set out to explore the new ways in which VR technology may affect our concept of self and self-awareness. ‘Self Study’ aims to critically explore VR as a significant and novel component in the history and tradition of the complex relationship between technology and the Self.

See more on Daniel’s work here.

On March 30th, Lynda Joy Gerry visited the Innovation Lab at Zürcher Hochschule der Künste (ZHdK) for a workshop entitled “Mimic Yourself.”

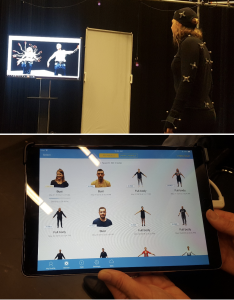

This workshop involved collaborations between psychologists, motion-tracking and motion-capture experts, and theater performers. The performers wore the Perception Neuron motion capture suit within an OptiTrack system. The data from the performers’ motion was mapped onto virtual avatars in real time. Specifically, the team had used the Structure Sensor depth camera to create photogrammetry scans of members of the lab. These scans then served as the avatar “characters” in the virtual environment, onto which the mocap actors’ movements were mapped. A screen was also programmed into the Unity environment so that it could be moved around in the real world, at different angles and in different three-dimensional planes, showing different views and perspectives of the tracked virtual avatar relative to the human actor’s movements. Two actors playfully danced and moved about while their tracked motion drove virtual effects: animating the virtual avatars and also cueing different sound effects and experiences.

Image above: Motion capture body suit worn by a human actor and tracked onto a virtual avatar. Multiple avatar “snapshots” can be taken to create visual effects and pictures. Images below: Creating a many-armed shakti pose with avatar screen captures created through mocap.

Image above shows examples of photogrammetry scans taken with the Structure Sensor.

Examination of Johanna Lehto’s MA thesis “Robots and Poetics – Using narrative elements in human-robot interaction” for the Department of Media, New Media Design and Production programme, on 16 May 2018.

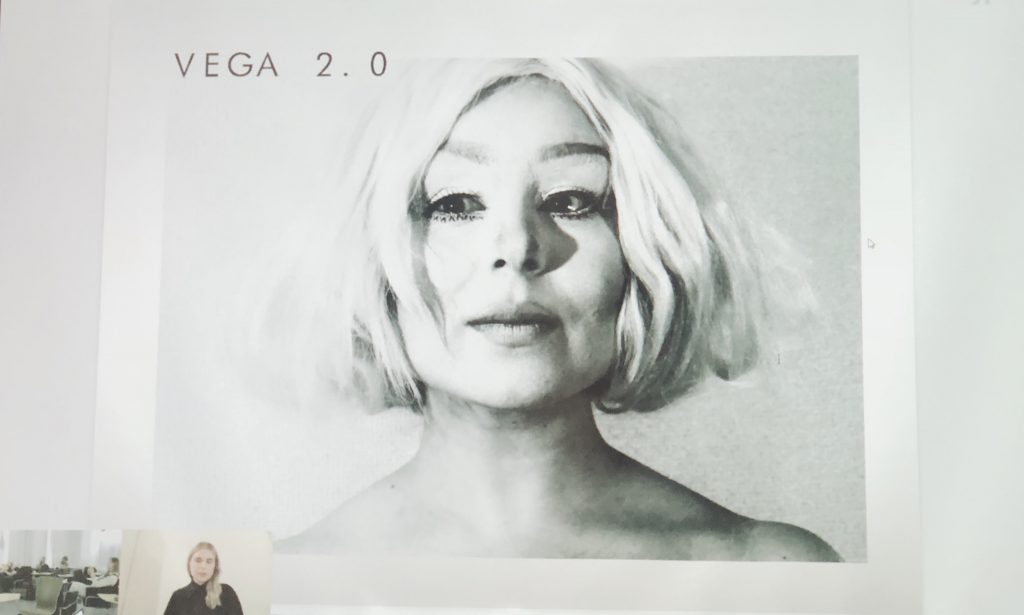

As a writer and a designer, Johanna Lehto sets out to reflect upon the phenomenon of human-robot interaction through her own artistic work. To illustrate the plot structure and narrative units of the interaction between a robot and a human, she draws on Aristotle’s dramatic principles. In her work, she applies Aristotelian dramatic structure to analyse a human-robot encounter as a dramatic event. Johanna made an interactive video installation presenting an AI character, Vega 2.0 (image). The installation was exhibited in Tokyo at the Hakoniwa exhibition on 22–24 June 2017 and at the Musashino Art University Open Campus festival on 10–11 June 2017.

News from our international network.

Since being connected through the Storytek Content+Tech Accelerator in fall 2017, Pia Tikka has consulted on the screenplay and audience interaction of the VFC project directed by Charles S. Roy.

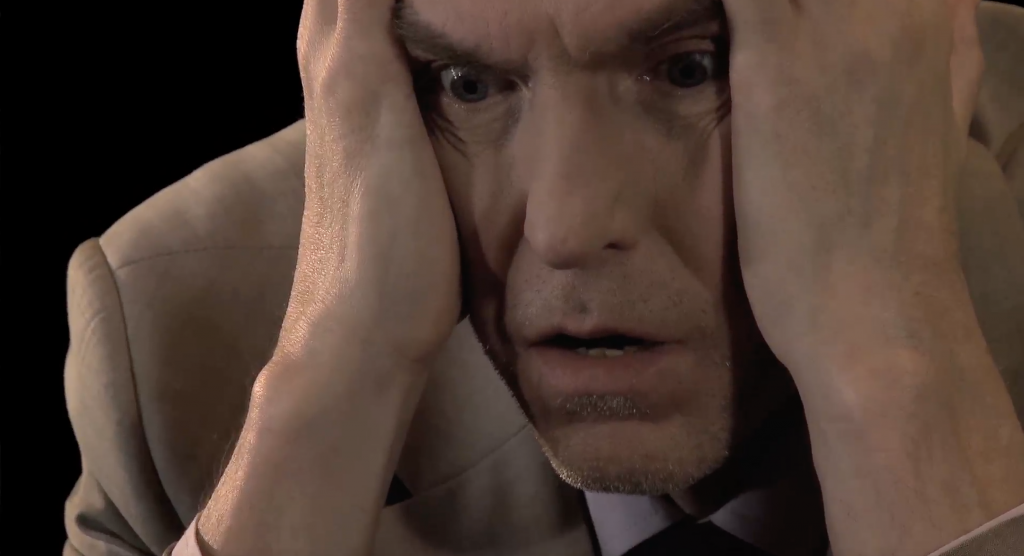

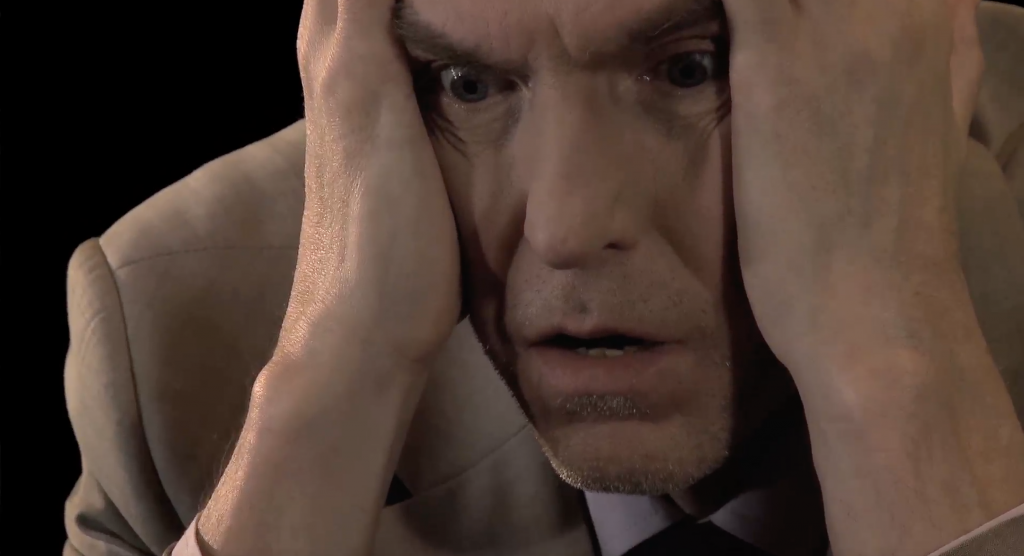

Charles S. Roy, Film Producer & Head of Innovation at the production company La Maison de Prod, is developing his debut narrative film and interactive project VFC as producer-director. VFC has been selected for the Storytek Content+Tech Accelerator, the Frontières Coproduction Market, the Cannes NEXT Cinema & Transmedia Pitch, the Sheffield Crossover Market, and Cross Video Days in Paris. In the vein of classic portrayals of female anxiety such as Roman Polanski’s REPULSION, Todd Haynes’ SAFE and Jonathan Glazer’s BIRTH, VFC is a primal and immersive psychological drama about the fear of music (cinando.com). Its main innovation lies in bringing brain-computer interface storytelling to the big screen by offering an interactive neurotech experience.

At the Cannes Film Market, as a grant holder of the Estonian innovation and development incubator Storytek Accelerator, Charles presented his work to the audience of the tech-focused NEXT section (8–13 May).