Hybrid Labs Symposium

The Third Renewable Futures Conference

May 30 – June 1, 2018, Aalto University, Otaniemi Campus

May 31 NeurocinemAtics on narrative experience

Hybrid Labs is the third edition of the Renewable Futures conference, which aims to challenge the future of knowledge creation through art and science. HYBRID LABS took place from May 30 to June 1, 2018 at Aalto University in Espoo, Finland, as part of Aalto Festival. Celebrating 50 years of the Leonardo journal and community, the HYBRID LABS conference looked back at the history of art and science collaboration, with the intent to reconsider and envision the future of hybrid laboratories, where scientific research and artistic practice meet and interact.

http://hybridlabs.aalto.fi/hls2018-symposium/

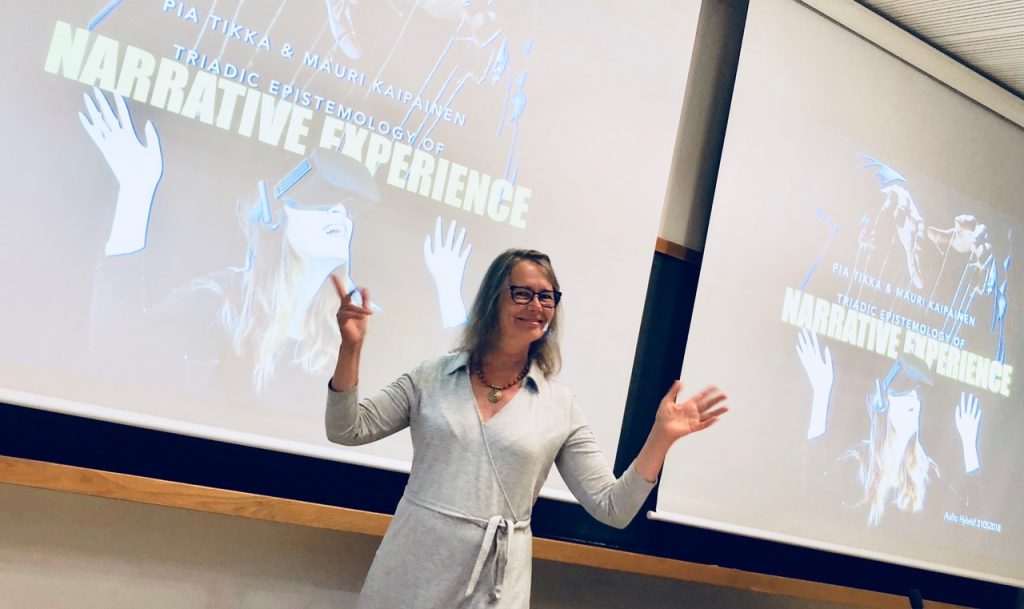

Pia Tikka and Mauri Kaipainen:

Triadic epistemology of narrative experience

We consider narrative experience as a triangular system of relations between narrative structure, narrative perspective, and the physiological manifestations associated with both. The proposal builds on the fundamentally pragmatist idea that no two of these elements are enough to explain each other; a third is always required to explicate the interpretative angle. Phenomenological accounts reject the idea of objective descriptions of experience altogether. At the same time, a holistic understanding must assume that a narrative is shared on some level, an assumption narratology must make, and that even individual experiences are embodied, as is evident to neuroscientists observing brain activity evoked by narrative experience. These accounts cannot remain incompatible forever. Using these elements, we discuss a triadic epistemology: a mutually complementary system of knowledge construction that combines phenomenological, narratological, and physiological angles in order to generate integrated knowledge about how different people experience particular narratives.

Our approach assumes a holistic, or more deeply, an enactive perspective on experiencing, that is, one that assumes systemic engagement in embodied, social, and situational environmental processes. Consequently, we propose that narrative content needs to be analyzed not only on the basis of the experiencer's subjective reports; these reports also need to be related to the neurophysiological manifestations of the experience. Conversely, describing the neural activity associated with viewing a film is not enough to relate it to the viewers' subjective reports; the observations also need to be interpreted in relation to storytelling conventions. A selection of cases is described to clarify the proposed triadic method.

Keywords: neurophenomenology, narrative experience, narrative perspective, enactive theory of mind, epistemology

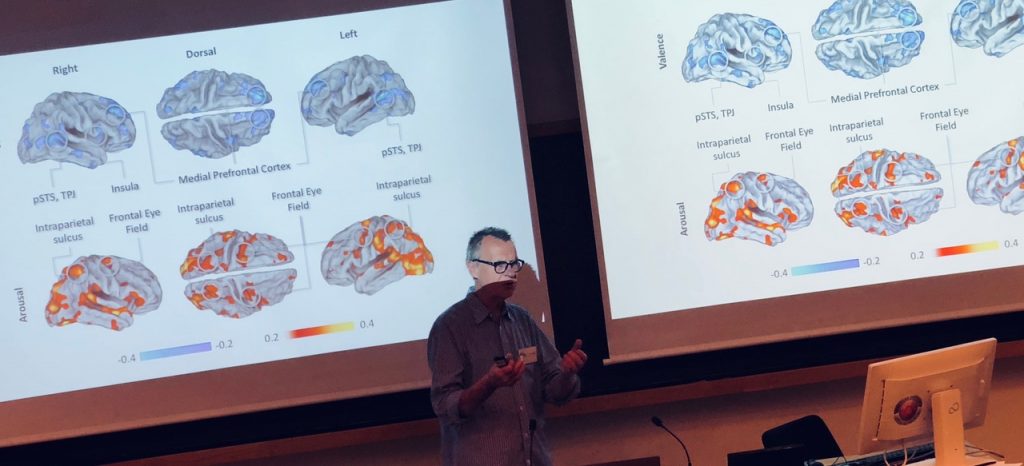

eFilm: Hyperfilms for basic and clinical research

My aivoAALTO collaborator Professor Mikko Sams presented highlights of neuroscience findings on film viewing in fMRI and introduced the concept of eFilm, a novel computational platform for producing and easily modifying films for use in basic and clinical research.

June 1 VR TALKS at Aalto Studios

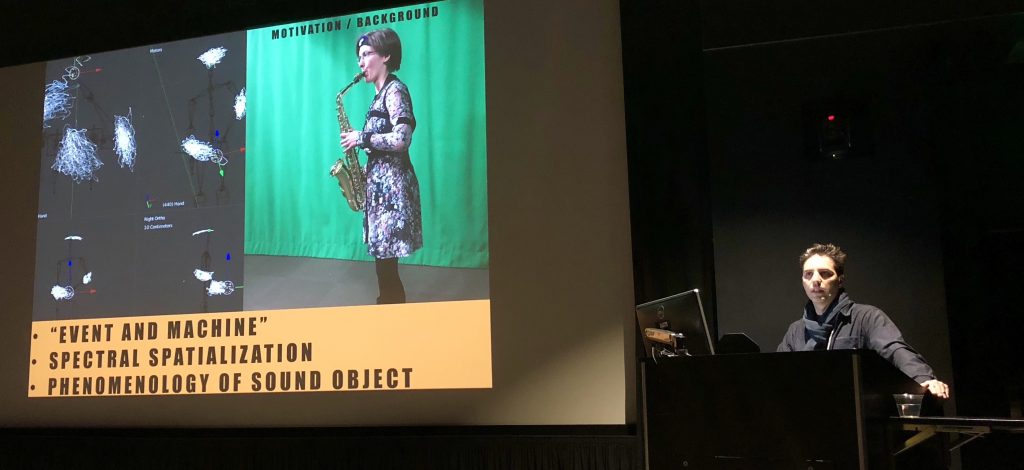

VR Research Talks, organised with the Virtual Cinema Lab and FiVR, formed a track dedicated to research in and around VR, with a focus on artistic praxes around sound, alternative narrations, and the self.

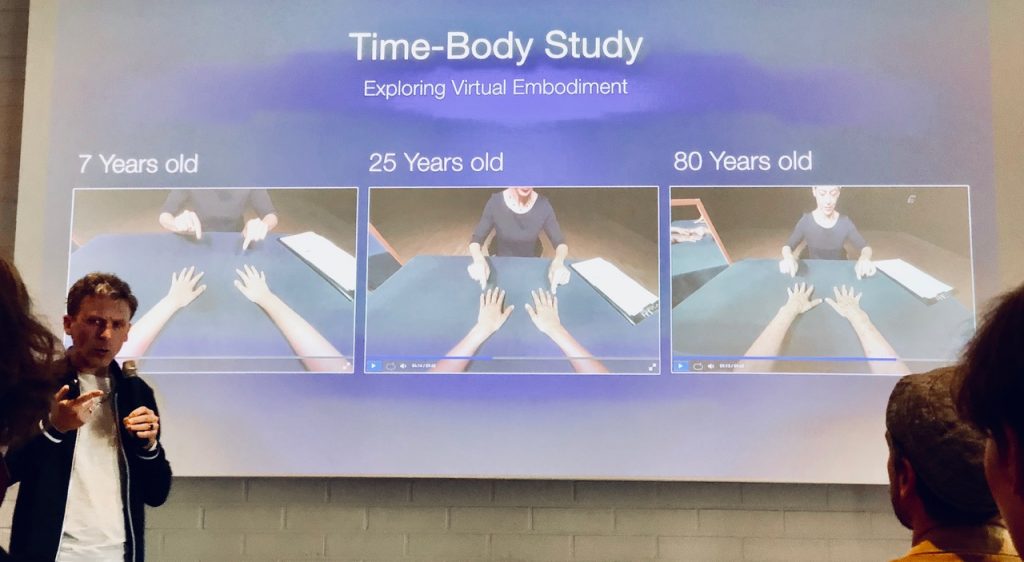

Daniel Landau: Meeting Yourself in Virtual Reality and Self-Compassion

Self-reflection is the human capacity for introspection and the willingness to learn more about one's fundamental nature, purpose, and essence. Between the internal process of self-reflection and the external observation of one’s reflection runs a thin line marking the relationship between the private self and the public self. From Narcissus’s pond, through reflective surfaces and mirrors, to present-day selfies, the concepts of self, body image, and self-awareness have been strongly influenced by human interaction with physical reflections. In fact, one can say that the evolution of technologies reproducing images of ourselves has played a major role in the evolution of the Self as a construct. With the current wave of virtual reality (VR) technology taking its first steps as a consumer product, we set out to explore the new ways in which VR technology may affect our concept of self and self-awareness. ‘Self Study’ aims to critically explore VR as a significant and novel component in the history and tradition of the complex relationship between technology and the Self.

See more on Daniel’s work here.

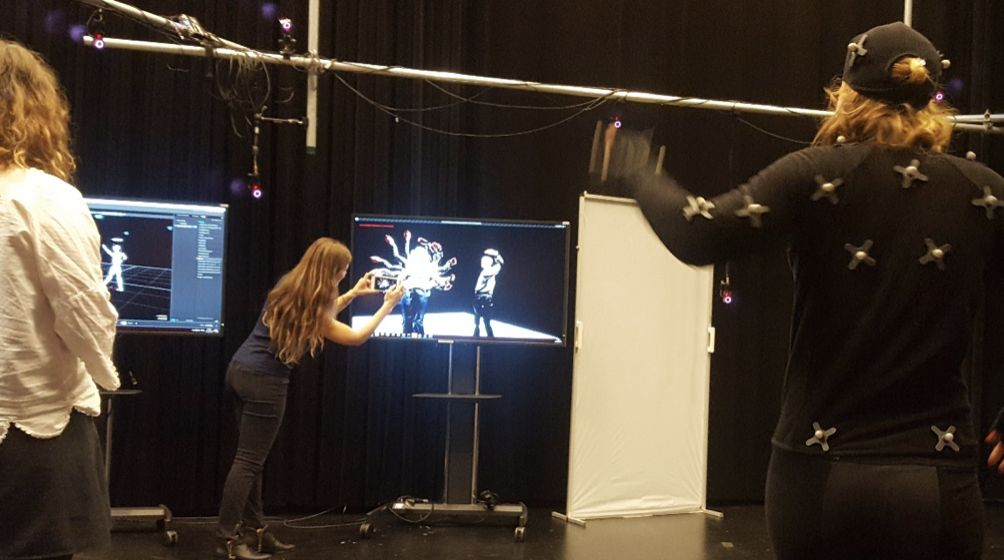

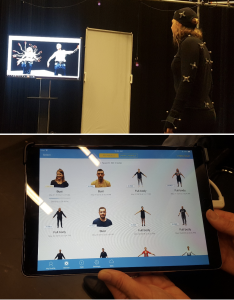

Testing how the viewer's facial expressions drive the behavior of a screen character, using Louise’s Digital Double (under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 license).

In the image: Lynda Joy Gerry, Dr. Ilkka Kosunen, and Turcu Gabriel, an Erasmus exchange student from the

See full program