http://bit.ly/ux-xr-everyday-vrscifest

How can technology help us stay connected despite any crisis?

How can we create meaningful scientific and artistic projects without being able to be close to each other?

Enactive Virtuality Research Group, Tallinn University

Art-Sci: artistic or scientific public exhibitions, installations and set-ups where the artistic aspects of the research are highlighted


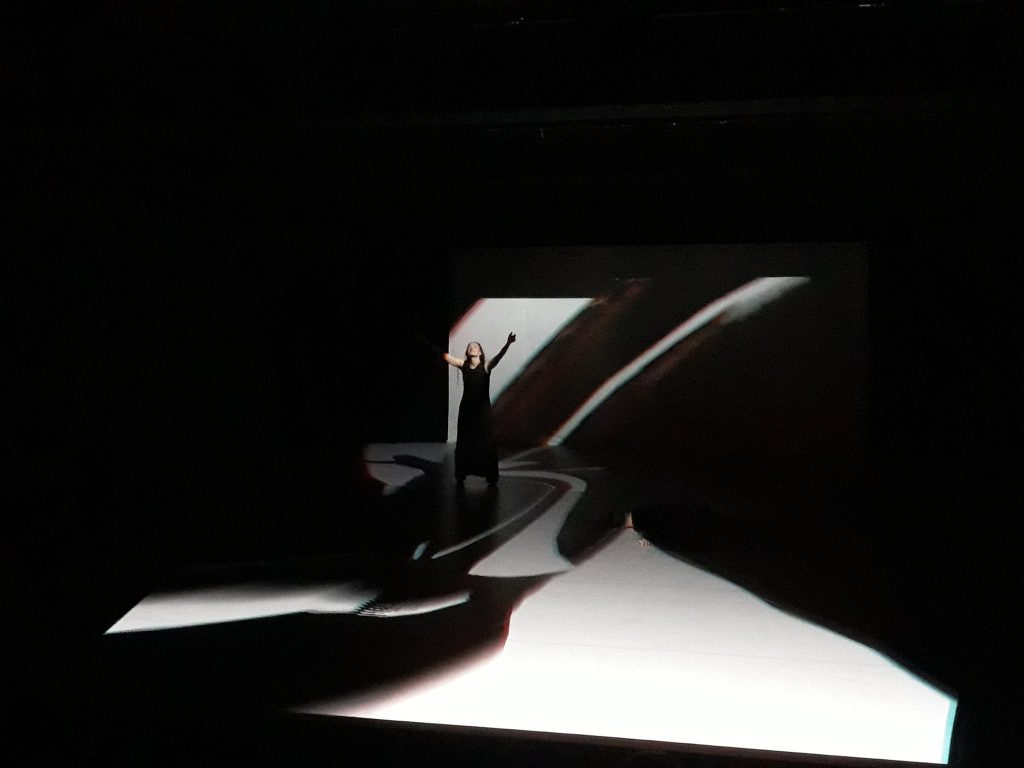

An invited keynote at the two-day conference “Actor and Avatar”, organised by Professor Anton Rey, IPF, ZHdK, on August 29th and 30th 2019 at the Toni Areal, Zurich University of the Arts (ZHdK). The “Actor and Avatar” project explores aspects of actor performances, aiming in particular to provide facial expressiveness for a virtual character (avatar), and is funded by the Swiss National Science Foundation.

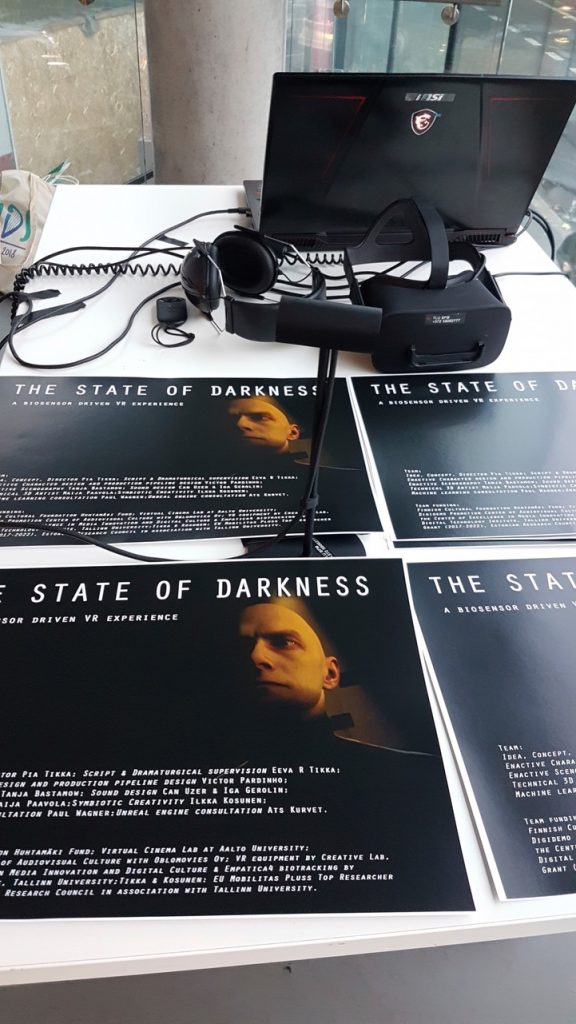

The VR installation The State of Darkness, previously exhibited in the Science Gallery, Dublin (Dec 2018) and in the 360 degrees (Prague 2019), will be presented at the conference. In addition, Enactive Virtuality Lab’s team member Victor Pardinho will run a Master’s Class for ZHdK students and staff.

The keynote by Pia Tikka on the 29th of August will address a range of topics related to actors and humanlike virtual characters in the collaborative setting described under the image.

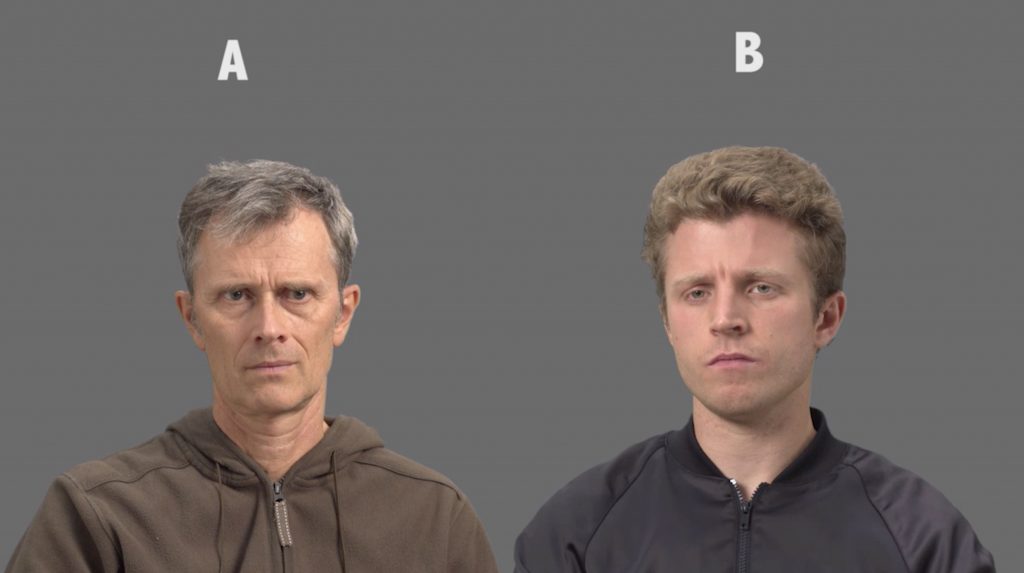

Images: Two examples of the recordings of a dyadic real-time setting in which two actors are seated in front of a green screen in the ZHdK IPF Film studio, looking at each other through a display in front of them that is directly connected to the camera in front of the other actor. One actor takes the role of an asylum seeker’s interviewer (I), while the other plays the role of an asylum seeker (AS). Both listen to the dramatised background story of the latter while evaluating each other’s emotional state within the dramatised context. The performances are applied to humanlike virtual characters in the project Booth, developed at the Enactive Virtuality Lab. Actors (upper row) Dr. Gunter Lösel [AS] and Tim Woody Haake [I]; (row below) Corinne Soland [I] and Samuel Braun [AS]. Images ©IPF, courtesy of Dr. Rey and Miriam Loertscher from the ZHdK research group.

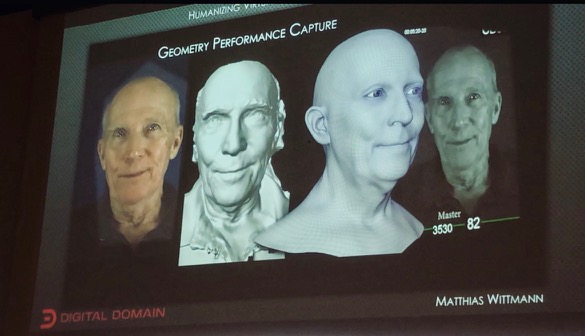

Images: Derek Bradley, Walt Disney Research Studio Zürich (above) and Matthias Wittmann, Digital Domain (below)

Industry engagement: Derek Bradley, Walt Disney Research Studio Zürich was one of the enactive experienters of facing Adam B in the State of Darkness. Here with Pia Tikka and Victor Pardinho (Sense of Space, Finland).

VR installation The State of Darkness exhibited at the ZHdK Sept 28–30.

Master’s Class by Victor Pardinho at the ZHdK, September 28, 2019

Topic:

In this workshop, the author will introduce a framework for virtual characters based on the volumetric scanning of real actors and 3D real-time engines. Participants will have an opportunity to try the system used in “The State of Darkness”, a biosensor-driven VR art installation where human and non-human narratives coexist. The structure of the meeting is flexible and can be adjusted according to the participants’ interests. We will discuss topics such as 3D asset workflows, real-time engines, volumetric capture, and the design and production of XR experiences.

With Piotr Winiewicz (in image) and Mads Damsbo at VRHAM! (Virtual Reality & Arts Festival Hamburg), Germany’s first international festival for Virtual Reality art. Established by Ulrich Schrauth in Hamburg in 2018, VRHAM! opened its doors for the second time from 7 – 15 June 2019 in Hamburg’s Oberhafenquartier. Under the year’s motto “DIS:SOLUTION”, a large selection of extraordinary Virtual Reality experiences and live performances by contemporary artists was presented.

Image: Cinematographer and my doctoral student Sampsa Huttunen (University of Helsinki) exploring the Augmented Reality work by–

Experiencing 0AR communally via three connected devices, audiences are encouraged to explore, move around the space and interact, as their actions have a unique influence within the performance. 0AR is based on the seminal masterpiece zero degrees (2005), a collaboration between dancers/choreographers Akram Khan and Sidi Larbi Cherkaoui, sculptor Antony Gormley and composer Nitin Sawhney.

Who are humans in the age of technology? What role do they play and how do they interact with the machines that surround them? These types of questions were already negotiated at the Bauhaus 100 years ago. DAS TOTALE TANZ THEATER frames these questions today in the context of the development of artificial intelligence by staging a virtual reality dance experience.

At an unprecedented speed faster than the extinction of most endangered species, we are losing our linguistic diversity—and the very means by which we know ourselves. This immersive oratorio is an invocation of the languages that have gone extinct and an incantation of those that are endangered.

From 1899 to 1926, Claude Monet painted more than 250 scenes devoted to the water lily theme, which became what he himself called “an obsession.” This contemplative VR experience invites the user on a sensory journey starting off in Claude Monet’s garden, stopping along the way at the workshop of the artist and ending in the exhibition rooms of the Orangerie Museum.

Vestige is a room-scale VR creative documentary that uses multi-narrative and volumetric live capture to take the viewer on a journey into the mind of Lisa as she remembers her lost love, Erik.

The State of Darkness is exhibited at BLUE HOUR of the Prague Quadrennial’s 36Q˚ June 8-16, 2019.

Enactive Virtuality Lab is presented by associated team members Tanja Bastamow (Virtual Cinema Lab, Aalto ARTS) and Victor Pardinho (Sense of Space Oy). Biosensor adaptation for the event by Ilkka Kosunen.

Prague Quadrennial’s 36Q˚ (pronounced “threesixty”) presents the artistic and technical side of performance design concerned with the creation of active, sensorial and predominantly non-tangible environments. Just like a performer, these emotionally charged environments follow a certain dramatic structure, change and evolve in time, and invite our visitors to immerse themselves in a new experience.

WORKSHOPS, MASTERCLASSES

Curated by Markéta Fantová and Jan K. Rolník

8 – 16 June

Small Sports Hall

Our global society seems to be obsessed with the fast-paced progress of technology and elevates rational intellectual and scientific pursuits above arts that are intuitive and visceral in their nature. And yet creative minds based in the arts are proving that boundless imagination paired with new technological advancements often results in original, highly inspiring and mind-expanding projects. Even though performance design doesn’t need to use modern technology and is often the most inspiring when it uses simple human interaction, we need to explore and experiment with the wide range of possibilities new technologies have to offer. PQ Artistic Director Markéta Fantová established 36Q˚ with those thoughts in mind and with a focus on the young, emerging generation of creatives.

Blue Hour

An experimental, interactive environment that fills the entire space of the Industrial Palace Sports Arena will welcome visitors on 8 June and remain open until the end of PQ 2019. The project, based on intensive teamwork that brings together experienced artists and emerging designers to create collaboratively, will be led by renowned French visual new media artist Romain Tardy. The curatorial team seeks to experiment with the shifting boundaries between the “non-material” or “virtual” and the “real” world, and to explore the capacity of performance design to enlist technology in cultural production.

See more here

TIME Dec 15, Noon

LOCATION SuperNova Kino, room 406, 4th floor, Narva mnt 27

An inspiring EEVR community event organised by MEDIT, including presentations, vivid discussions, technical and artistic demos with highlights by visiting Finnish media artist Hanna Haaslahti (middle) and producer Marko Tandefelt (right).

Announcement by Madis Vasser:

EEVR #21 will once again find itself in familiar territory on the fourth floor of the BFM school in Tallinn, but this time around our host is MEDIT – TLU Center of Excellence in Media Innovation and Digital Culture. We’ll be mixing film, photogrammetry, and some very interesting hardware. Everyone interested in VR/AR is very welcome! The event is free, but do click the attend button early if you plan to show up! Go to FB.

On the schedule:

* Hanna Haaslahti (http://www.hannahaaslahti.net/) – some cool photogrammetry projects

* Madis Krisman & Johannes Kruusma (Avar.ee) – some more cool photogrammetry projects

* Rein Zobel (MaruVR.ee) – VR Days 2018 recap etc

Demos:

* State of Darkness VR – Enactive Virtuality Research Group

* Magic Leap (courtesy of https://www.operose.io/)

* “Hands-on” with some prototype hardware (top secret)

Captured is a narrative simulation about social injustice where your digital double has a role to play. In the installation, people are captured as 3D Avatars who become actors in a scenario where individual freedom is taken over by collective instincts.

Team

Hanna Haaslahti is a Finnish media artist working with ideas from technological theater, expanded image and interaction. She holds an MFA from Medialab in University of Arts and Design Helsinki (Aalto). Currently Hanna Haaslahti lives and works in Helsinki. She has been artist-in-residence at MagicMediaLab, Brussels (2000), Nifca NewMediaAir, St. Petersburg (2003), Cité International des Arts, Paris (2008) and SculptureShock organized by the Royal British Society of Sculptors, London (2015). She received an honorary mention at the Vida 6.0 Art and Artificial Life competition (2003) and was selected in the 50 best category in the ZKM Medien Kunst Preis (2003). She has received the most prestigious Finnish media art award, the AVEK award (2005).

Marko Tandefelt is a Helsinki-based concept designer, educator and musician with extensive experience in the fields of art, design, media and technology. His interests include concept design, sensor-based interface prototyping, immersive multisensory cinema, and experimental visualization systems.

Marko has lived in New York for 20+ years, working at companies such as NEC R&D Labs, ESIDesign, Antenna Design and the Finnish Cultural Institute. During 2007-2015 Marko worked as the Director of Technology & Research/Senior Technology Manager at Eyebeam Art & Technology Center. Marko taught Master’s thesis courses in the Parsons School of Design MFADT program in New York from 2001 until 2016.

In his native Helsinki, Finland, Marko has worked since 2016 as a Technology Consultant and Producer in various interactive projects, including Hanna Haaslahti’s real-time 3D body scanning installation system “Captured”. Marko currently works at Kunstventures as a media art producer, concept designer and prototyper.

Marko holds a B.M. degree Summa Cum Laude in Music Technology from NYU, and a Master’s degree from NYU Tisch School of the Arts Film & TV School Interactive Telecommunications Program ITP. He is a longtime member of ACM, AES, IEEE, SIGGRAPH, and SMPTE, and has worked as a paper reader and jury member for SIGGRAPH and ACE conferences.

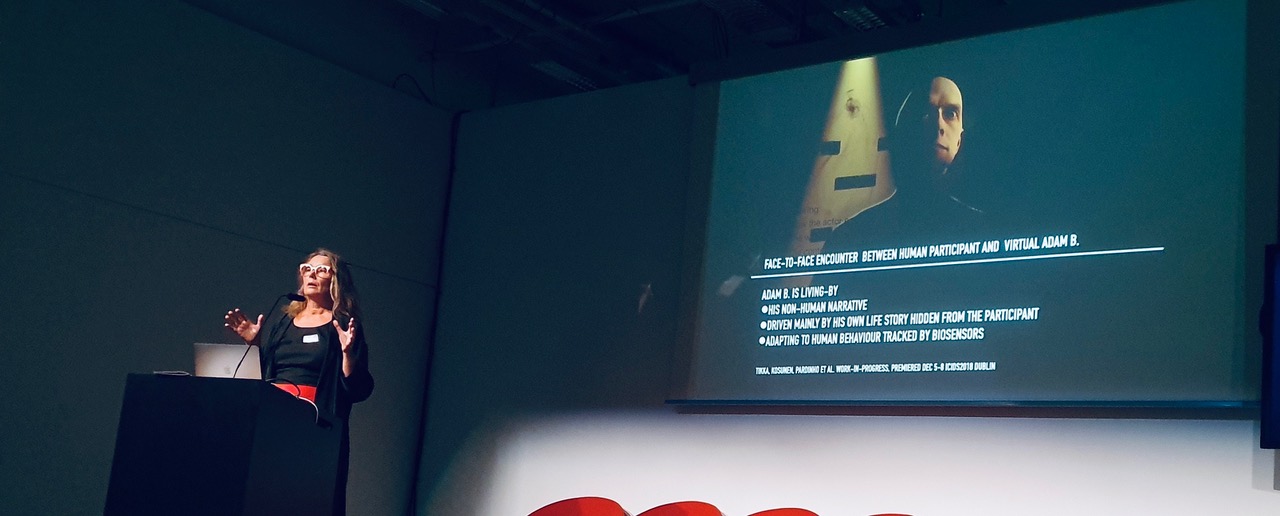

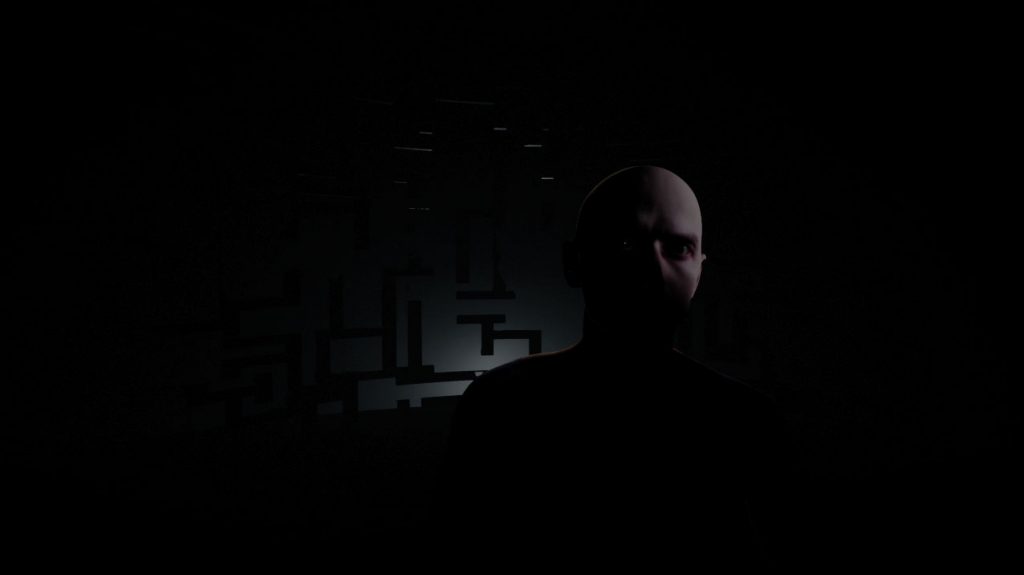

In the VR-mediated experience of the State of Darkness, the participant will meet face-to-face with a humanlike artificial character in an immersive narrative context. See ICIDS2018 Art Expo catalogue here

The human mind and culture rely on narratives people live by every day, narratives they tell to one another, and narratives that allow them to learn from others, for instance, in movies, books, or social media. Yet, the State of Darkness connects the notion of non-human narratives to the stories experienced by our virtual character, Adam B. Trained on an exhaustive range of human facial repertoire, Adam B has gained control of his facial expressions when encountering humans.

Our concept builds on the idea of a symbiotic interactive co-presence of a human and a non-human. Adam B will be experiencing his own non-human narrative that draws to some extent from the behavior of the participant, yet is driven mainly by Adam B’s own life story, hidden from the participant and emerging within the complexity of Adam B’s algorithmic mind. The State of Darkness is an art installation where human and non-human narratives coexist, the first experienced and lived by our participant and the latter experienced by our artificial character Adam B, as they meet face-to-face, embedded in the narrative world of the State of Darkness.

Above: Janet H. Murray meeting our Adam B. face-to-face at ICIDS2018 Art Expo, Trinity College Science Gallery, Dublin (Dec 5)

Image above: Enactive Scenographer Tanja Bastamow testing the installation at ICIDS2018, Trinity College Science Gallery, a day before the opening of the Art Exhibition on Dec 5.

Team

Idea, Concept, Director Pia Tikka; Script & Dramaturgical supervision Eeva R Tikka; Enactive Character design and production pipeline design Victor Pardinho; Enactive Scenography Tanja Bastamow; Sound design Can Uzer; Sound design II Iga Gerolin; Technical 3D Artist Maija Paavola; Symbiotic Creativity Ilkka Kosunen; Machine learning consultation Paul Wagner; Unreal engine consultation Ats Kurvet.

Team funding

Finnish Cultural Foundation Huhtamäki Fund; Virtual Cinema Lab Aalto University School of ARTS; Digidemo Promotion Center of Audiovisual Culture with Oblomovies Oy; VR equipment by Creative Lab, the Center of Excellence in Media Innovation and Digital Culture & Empatica4 biotracking by Digital Technology Institute, Tallinn University; Tikka & Kosunen: EU Mobilitas Pluss Top Researcher Grant (2017-2022), Estonian Research Council in association with Tallinn University.

For more information, contact: piatikka@tlu.ee

The International Conference on Interactive Digital Storytelling, ICIDS 2018, 5-8 December 2018, Trinity College Dublin, Ireland. The State of Darkness VR installation premiered in the ICIDS 2018 Art Exhibition, a platform for artists to explore digital media for interactive storytelling from the perspective of a particular curatorial theme: Non-Human Narratives. See https://icids2018.scss.tcd.ie

“TRISOLDE – Neuroadaptive Gesamtkunstwerk: The Biocybernetic Symbiosis of Tristan and Isolde”

Exploring the final frontier of human-computer interaction with a neuroadaptive opera… performed by the audience, dancers and computational creativity.

The team of “TRISOLDE” (Tiina Ollesk, Simo Kruusement, Renee Nõmmik, Ilkka Kosunen, Hans-Günther Lock, Giovanni Albini) performed at the festival “IndepenDance” in Göteborg, Nov 29 and Dec 2, 2019.

A symbiotic dance version of Wagner’s “Tristan and Isolde” where dancers control the music via body movements and implicit psychophysiological signals. This work explores the next step in the coming-together of man and machine: the symbiotic interaction paradigm, where the computer automatically senses the cognitive and affective state of the user and adapts appropriately in real time. It brings together many exciting fields of research, from computational creativity to physiological computing. Measuring the audience and using its reactions to modulate the orchestra is a new way of doing “participatory theatre”, where the audience becomes part of the performance.
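The sense-and-adapt loop described above can be illustrated with a minimal sketch. It is not the TRISOLDE system: the choice of heart rate as the signal, tempo as the musical parameter, and the names `normalise` and `TempoAdapter` are all assumptions made only for illustration.

```python
def normalise(value: float, low: float, high: float) -> float:
    """Map a raw sensor value from [low, high] to [0, 1], clamping outliers."""
    if high <= low:
        raise ValueError("high must be greater than low")
    return min(1.0, max(0.0, (value - low) / (high - low)))


class TempoAdapter:
    """Hypothetical mapping from a smoothed arousal level to a musical tempo."""

    def __init__(self, min_bpm: float = 60.0, max_bpm: float = 120.0,
                 smoothing: float = 0.2) -> None:
        self.min_bpm = min_bpm
        self.max_bpm = max_bpm
        self.smoothing = smoothing  # 0 = ignore new samples, 1 = no smoothing
        self.level = 0.0            # smoothed arousal estimate in [0, 1]

    def update(self, arousal: float) -> float:
        """Feed one normalised arousal sample and return the adapted tempo."""
        # Exponential smoothing keeps the music from jumping on every sample.
        self.level += self.smoothing * (arousal - self.level)
        return self.min_bpm + self.level * (self.max_bpm - self.min_bpm)


if __name__ == "__main__":
    adapter = TempoAdapter(smoothing=0.5)
    for heart_rate in (62, 75, 90, 100):  # assumed samples in beats per minute
        arousal = normalise(heart_rate, low=60, high=100)
        print(heart_rate, round(adapter.update(arousal), 1))
```

The smoothing factor trades responsiveness for stability: a low value makes the music drift slowly with the measured state, while a high value makes it follow each sample closely.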

“Tristan and Isolde” is widely considered both one of the greatest operas of all time and the beginning of modernism in music, introducing techniques such as chromaticism, dissonance and even atonality. It has sometimes been described as a “symphony with words”; the opera lacks major stage action, large choruses or a wide range of characters. Most of the “action” in the opera happens inside the heads of Tristan and Isolde. This provides amazing possibilities for a biocybernetic system: in this case, Tristan and Isolde will communicate both explicitly (through the movement of the dancers) and implicitly via the measured psychophysiological signals.

Dance artists: Tiina Ollesk, Simo Kruusement

Choreographer-director: Renee Nõmmik

Dramaturgy and science of biocybernetic symbiosis: Ilkka Kosunen

Composers for interactive audio media: Giovanni Albini, Hans-Gunter Lock

Video interaction: Valentin Siltsenko

Duration: 40’

This performance is supported by the Cultural Endowment of Estonia, and by Enactive Virtuality Lab and Digital Technology Institute (biosensors), Tallinn University.

Presentation of the project: November 29th-30th and December 1st, 2018 at 3:e Våningen, Göteborg (Sweden), at the festival IndepenDance. The event is dedicated to the centenary of the Republic of Estonia and supported by the program “Estonia100-EV100”.

PREMIERE IN TALLINN FEBRUARY 2019 (see more Fine 5 Theater)

Enactive Virtuality Lab presented its collaborative research with the Brain and Mind Lab of the Aalto School of Science at the Worlding the Brain Conference at Aarhus University, Nov 27-29.

TITLE: Narrative priming of moral judgments in film viewing

Authors: Pia Tikka, Jenni Hannukainen, Tommi Himberg, and Mikko Sams

In collaboration with BeAnotherLab (The Machine to Be Another), Lynda Joy Gerry taught a workshop, “Embodying Creative Expertise in Virtual Reality”, to Master’s in Interaction Design students at Zürcher Hochschule der Künste (ZHdK), as part of a course, “Ecological perception, embodiment, and behavioral change in immersive design”, led by BAL members.

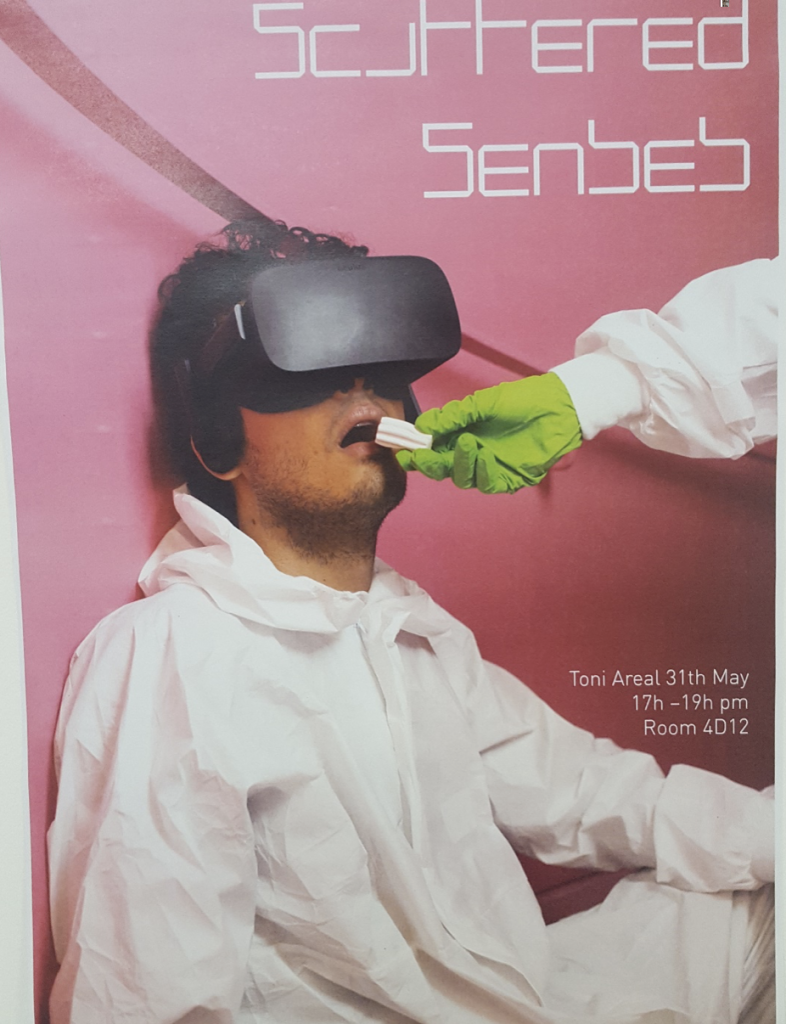

Image: Poster for the students’ final project presentation and exhibition.

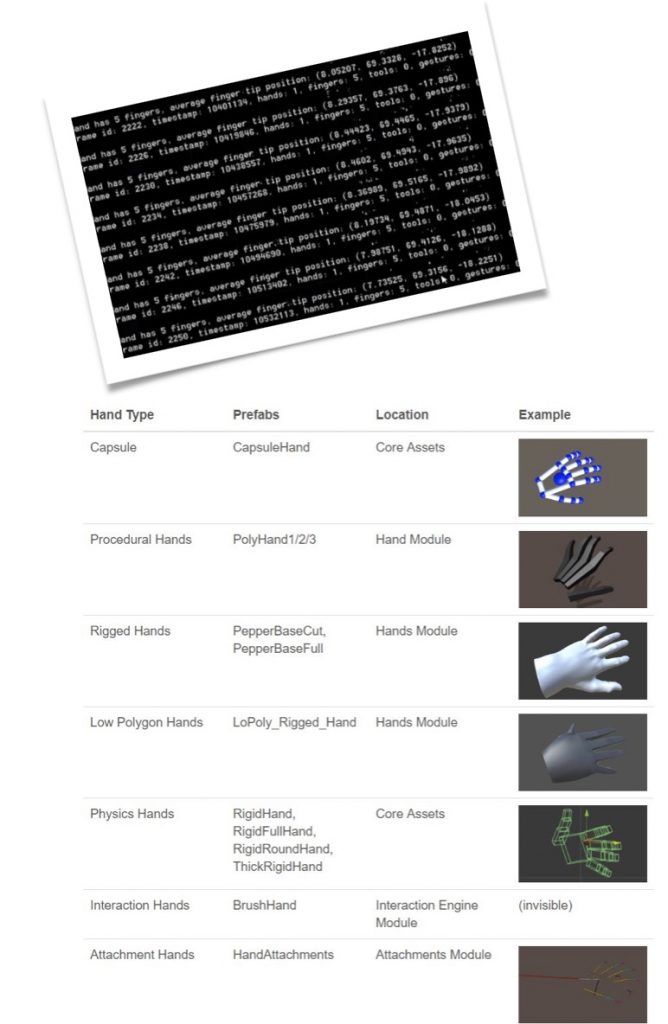

Lynda specifically taught students design approaches using a semi-transparent video overlay of another person’s first-person, embodied experience, as in First-Person Squared. The focus of the workshop was on Leap Motion data tracking and measurements, specifically how to calculate compatibility and interpersonal motor coordination through a match score between the two participants, and how to send this data over a network. The system provides motor feedback for imitative gestures that are similar in form and position, and also for gestures that occur synchronously (at the same time), ideally supporting both types of interpersonal motor coordination. Lynda taught students the equations and data inputs needed to compute the different match scores, and also how to add interaction effects to this data. Lynda showed students how to implement Leap Motion hand tracking on top of stereoscopic point-of-view video and how to record user hand movements. On the 31st, students premiered their final projects at an event entitled “Scattered Senses.”
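The two kinds of match score mentioned above can be sketched as follows. The workshop's actual equations are not given here, so everything in this example is an assumption for illustration: the function names, the distance-based position score, the speed-correlation synchrony score, and the JSON payload are hypothetical, and plain (x, y, z) tuples stand in for tracked palm positions rather than real Leap Motion API objects.

```python
import json
import math
from typing import List, Tuple

Point = Tuple[float, float, float]  # one tracked palm position (assumed in mm)


def position_match(a: List[Point], b: List[Point], scale: float = 100.0) -> float:
    """Score in (0, 1]: 1.0 when both hand paths coincide frame by frame."""
    if len(a) != len(b) or not a:
        raise ValueError("paths must be non-empty and equally long")
    mean_dist = sum(math.dist(p, q) for p, q in zip(a, b)) / len(a)
    return 1.0 / (1.0 + mean_dist / scale)


def speeds(path: List[Point]) -> List[float]:
    """Frame-to-frame displacement of one hand."""
    return [math.dist(p, q) for p, q in zip(path, path[1:])]


def synchrony_match(a: List[Point], b: List[Point]) -> float:
    """Pearson correlation of the two speed profiles, clipped to [0, 1]."""
    sa, sb = speeds(a), speeds(b)
    n = len(sa)
    if n != len(sb) or n < 2:
        raise ValueError("need at least three frames per path")
    mean_a, mean_b = sum(sa) / n, sum(sb) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(sa, sb))
    var_a = sum((x - mean_a) ** 2 for x in sa)
    var_b = sum((y - mean_b) ** 2 for y in sb)
    if var_a == 0 or var_b == 0:
        return 0.0  # a hand moving at constant speed carries no timing signal
    return max(0.0, cov / math.sqrt(var_a * var_b))


def encode_scores(a: List[Point], b: List[Point]) -> bytes:
    """Pack both scores as UTF-8 JSON, ready to send over a socket."""
    payload = {"position": position_match(a, b),
               "synchrony": synchrony_match(a, b)}
    return json.dumps(payload).encode("utf-8")


if __name__ == "__main__":
    leader = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (3.0, 0.0, 0.0), (6.0, 0.0, 0.0)]
    follower = [(x, y + 100.0, z) for x, y, z in leader]  # same gesture, offset
    print(encode_scores(leader, follower).decode("utf-8"))
```

Keeping the two scores separate reflects the distinction in the text: a follower who copies the gesture at a different location scores low on position but high on synchrony, and the reverse holds for a delayed but spatially accurate imitation.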